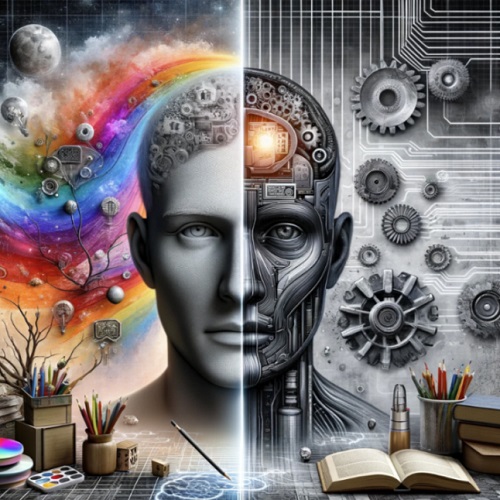

Modern AI can produce amazing things. A study has now compared the creativity of humans and artificial intelligence (AI) and found hardly any differences. So are machines just as imaginative as humans?

Creativity is considered to be something very human. But with new programs like ChatGPT, the question arises as to whether artificial intelligence can also come up with genuinely new ideas. The programs can already write poems, think up jokes, create images, and compose pieces of music. Universities and schools already fear a wave of computer-generated term papers and theses.

Same results in creativity test

Researchers at the Humboldt University of Berlin (HU) and the University of Essex have now examined, in a study published as a preprint, how creative AI is compared to humans. They had 100 humans and six generative AI programs complete a creativity test. The result: overall, there were hardly any differences between humans and machines. “The study shows that chatbots that are asked the same simple question as humans generate more ideas that are, on average, just as original as those of humans,” the paper says.

“That didn’t really surprise us,” says author Jennifer Haase from the HU. “Because the programs are now really very good in the area of everyday creativity.” Specifically, the study used the “Alternative Uses Test” (AUT). This involves asking for other possible uses for everyday objects such as a toothbrush or a paper clip. For example, a paper clip could also be used as a replacement part for a broken zipper or as an earring. The more original the answers, the higher they were rated by six human raters and a dedicated AI.
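To make “more original” concrete, one simple approach is to score each Alternative Uses Test answer by statistical rarity: an answer that few participants gave counts as more original. This is a swapped-in illustration, not the study's method, which relied on six human raters and an AI. The function name, the input format, and the assumption that equivalent answers have already been normalized to identical strings are all hypothetical.

```python
from collections import Counter

def originality_scores(responses_by_person):
    """Score each participant's AUT answers for one object by rarity.

    responses_by_person: dict mapping a participant ID to a list of answers.
    Assumes (hypothetically) that equivalent ideas are already normalized
    to the same string, e.g. "zipper pull" for every zipper-repair answer.
    """
    # Count how many participants gave each answer (at most once per person).
    counts = Counter()
    for answers in responses_by_person.values():
        counts.update(set(answers))
    n = len(responses_by_person)

    scores = {}
    for person, answers in responses_by_person.items():
        unique = set(answers)
        # Rarity of an answer: 1 minus the share of participants who gave it.
        rarity = [1 - counts[a] / n for a in unique]
        # Average rarity across this person's distinct answers.
        scores[person] = sum(rarity) / len(rarity) if rarity else 0.0
    return scores

# Hypothetical answers for "paper clip" from three participants.
responses = {
    "p1": ["hold paper", "zipper pull"],
    "p2": ["hold paper", "earring"],
    "p3": ["hold paper"],
}
print(originality_scores(responses))
```

Here the participant who only gave the common answer scores lowest. Rarity scoring depends heavily on how paraphrases are grouped, which this sketch leaves to the caller.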

“This is a very frequently used procedure,” psychologist and creativity researcher Joachim Funke told tagesschau.de. Of course, the test can only cover a small area. “But creativity is simply very difficult to grasp, which is why people like to resort to such tests.” However, some details of the results are interesting: for example, almost ten percent of the people in the test were more creative than any AI.

Little-C and Big-C

Antonio Krüger, director of the German Research Center for Artificial Intelligence, also supports this assessment. “What programs can produce today is probably considered creative by most people. What they cannot do, however, is break new ground in the abstract, because the architecture of the programs is not suitable for that.” The human brain is much more complex and therefore capable of more unusual things – and that will remain the case in the long term.

Another important difference is that programs always need an external stimulus to become creative. “People also just create things on their own and therefore find it easier to find a way out when they reach a dead end. Algorithms cannot do that; they always need a stimulus,” says Krüger.

Researchers distinguish between different types of creativity. Little-C, for example, is the ability to solve everyday problems in an imaginative way. Big-C is the creation of something completely new that has an impact on society. For these top performances, Funke says, people are needed, and the study also suggests this. “Because programs do not have the whole emotional world that leads to great works. The motivation from which creativity arises is therefore completely different: people have an intrinsic motivation. And that is important for the evaluation of creative performance, even if the end result sounds or looks similar.”

Different process, similar result

The study authors also emphasize that one cannot conclude in general that AI is just as creative as humans. An important finding, however, is that in the area of everyday creativity AI can achieve results that keep up with those of many people. The more complex the tasks become, though, the more problems AI will encounter.

Another important result is that the claim that chatbots only combine already known things in new ways no longer holds. “These programs achieve astonishing results in a setting in which they are measured against many people. They produce things that many people consider creative, even if the process behind them is completely different,” says Haase.

AI as a creativity tool

The study results therefore also suggest that AI may well take on individual creative tasks in the future. This applies to areas in which it already achieves very good creative results, such as the design or storytelling of computer games.

Krüger emphasizes that people can take advantage of the creativity of the programs. “They are a very good tool for initiating or developing ideas.” This is especially true because individual programs are getting better and better in very specific areas, such as image design or text. He therefore does not rule out the possibility that AI will also demonstrate real creativity at some point. “But it will be some time before that happens; until then I see no danger of displacement on a broad scale.”

Is computational creativity possible? The recent excitement around generative artificial intelligence (AI) tools such as ChatGPT, Midjourney, Dall-E, and others raises new questions about whether creativity is an exclusively human capability. Several recent and exceptional achievements of generative AI prompt this question:

In 2018, an AI-generated artwork, The Portrait of Edmond de Belamy, was sold by Christie’s auction house for $432,500, almost 45 times its highest estimate. The artwork was produced by a generative adversarial network fueled by a dataset of 15,000 portraits spanning six centuries.

Music producers such as Grammy-nominee Alex Da Kid have collaborated with AI, specifically IBM’s Watson, to create hits and inform their creative process.

In the cases mentioned, a human still plays a significant role, shaping the AI’s output according to their own vision and thus retaining authorship of the piece. An AI image generator such as Dall-E, however, can swiftly produce original output on any desired theme. Through techniques such as diffusion, in which a model trained on vast datasets learns to turn random noise step by step into coherent output, generative AI tools can now transform written phrases into unique images or improvise music in the style of any composer. The new content resembles the training data but is not identical to it.
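The “noise” half of a diffusion model can be shown in a few lines. During training, data is corrupted step by step with Gaussian noise, and the model learns to undo those steps, so that generation can later start from pure noise. This minimal sketch shows only the closed-form noising step x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise. It assumes a DDPM-style linear noise schedule; the range of 1e-4 to 0.02 over 1,000 steps is an assumption, not a detail from the text. The trained denoiser, which is where the learning happens, is omitted.

```python
import math
import random

def linear_betas(steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (an assumed DDPM-style schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]

def alpha_bar(betas, t):
    """Fraction of the original signal variance left after steps 0..t."""
    prod = 1.0
    for b in betas[: t + 1]:
        prod *= 1.0 - b
    return prod

def noisy_sample(x0, betas, t, rng):
    """Jump directly to step t: x_t = sqrt(ab) * x0 + sqrt(1 - ab) * Gaussian noise."""
    ab = alpha_bar(betas, t)
    return [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * rng.gauss(0.0, 1.0) for x in x0]

betas = linear_betas(1000)
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]                      # stand-in for a tiny three-pixel "image"
print(noisy_sample(x0, betas, 10, rng))    # still close to x0
print(noisy_sample(x0, betas, 999, rng))   # essentially pure Gaussian noise
```

A generator runs this process in reverse. It starts from random noise and repeatedly applies the learned denoiser, optionally guided by a text prompt.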

Authorship becomes more intricate in this context. Is it the algorithm? The thousands of artists whose work has been used to create the image? The prompter who effectively describes the style, reference, subject matter, lighting, perspective, and even evoked emotion? Answering these questions requires revisiting an age-old one.

What constitutes creativity?

According to Margaret Boden, there are three types of creativity: combinational, exploratory, and transformational. Combinational creativity combines familiar ideas. Exploratory creativity generates new ideas by exploring ‘structured conceptual spaces,’ modifying an accepted style of thinking by probing its contents, limits, and potential. Both of these types bear some resemblance to generative AI’s algorithmic art production: creating unique works in the same style as millions of others in the training data, a form of ‘synthetic creativity.’

Transformational creativity, however, involves generating ideas beyond existing structures and styles to create something entirely original; this lies at the heart of current debates surrounding AI in terms of fair use and copyright – a largely uncharted legal territory, so we will have to wait and see what the courts decide.

The defining characteristic of AI’s creative processes is that current computational creativity is systematic rather than impulsive, as human creativity can be. It is programmed to process information in a certain way to reliably achieve specific results, yet often in unexpected ways. This points to what is arguably the most significant, and most controversial, difference between artists and AI: while artists are self- and product-driven, AI is very much consumer-centric and market-driven. We only get the art we request, which might not necessarily be what we need.

Generative AI appears to function most effectively when collaborating with humans, and perhaps the synthetic creativity of AI serves as a catalyst to enhance our human creativity rather than replace it. As is often the case, the excitement around these tools as disruptive forces exceeds the reality. Indeed, art history shows that technology has rarely displaced humans directly from the work it was expected to replace. Take the example of the camera, which initially caused concern due to its potential to put portrait painters out of business. What, then, are the business implications of AI’s synthetic creativity?

Synthetic art for business

On-demand synthetic creativity, as currently generated by AI, is unquestionably advantageous for business and marketing. Recent instances include:

- AI-enhanced advertising: Ogilvy Paris employed Dall-E to produce an AI iteration of Vermeer’s The Milkmaid for Nestle yogurts.

- AI-designed furniture: Kartell, Philippe Starck, and Autodesk collaborated with AI to create the first AI-designed chair intended for sustainable manufacturing.

- AI-augmented fashion styling: Stitch Fix utilized AI to create personalized visualizations of clothing based on specific customer preferences such as color, fabric, and style.

The potential application scenarios are vast, and they call for another form of creativity: curation. AI is known to ‘hallucinate’ (an industry term for producing nonsensical output). The distinctly human skill needed here is sense-making, which involves articulating concepts, ideas, and truths rather than merely pleasing the senses. Curation is therefore essential for selecting and presenting, or reimagining, a cohesive and compelling vision.

There is tremendous concern that generative AI (technologies that can create new content such as text, images, and video) could replace people in many jobs. However, one of the biggest opportunities generative AI presents is to augment human creativity and overcome the challenges of democratizing innovation.

Over the past twenty years, companies have used crowdsourcing and idea competitions to engage external parties in the innovation process. However, many businesses have struggled to capitalize on these contributions. For example, they have had difficulty assessing the ideas effectively and integrating disparate ones.

According to the authors, generative AI can help address these difficulties. It can complement the creativity of employees and customers by helping them generate and identify innovative ideas. It can also improve the quality of initial ideas. More specifically, companies can use generative AI to stimulate divergent thinking, counteract bias stemming from expertise, aid in idea evaluation, facilitate idea refinement, and promote collaboration among users.

While there is significant concern about generative AI’s potential to replace human workers in various roles, one of the most significant opportunities it presents for businesses and governments is to enhance human creativity and address the obstacles to democratizing innovation.

The concept of “democratizing innovation” was coined by Eric von Hippel of MIT. Since the 1970s, he has researched and written about the potential for users to develop the products and services they need. Over the last two decades, the idea of deeply involving users in the innovation process has gained traction, and companies now use crowdsourcing and innovation contests to generate numerous new ideas. However, many enterprises struggle to capitalize on these contributions because of four primary challenges.

First, efforts to democratize innovation can lead to evaluation overload. For instance, crowdsourcing may produce an overwhelming number of ideas, and many are ultimately discarded because companies lack an efficient way to evaluate them. Nor can they easily combine incomplete or minor ideas that could be potent together.

Second, companies may be susceptible to the curse of expertise. Domain experts, who excel at generating and recognizing feasible ideas, often struggle with generating or accepting novel ideas.

Third, individuals lacking domain expertise may identify novel ideas but be unable to provide the details needed to make those ideas feasible. They cannot translate messy ideas into coherent designs.

Finally, companies struggle to see the big picture. Organizations focus on amalgamating a multitude of customer requirements but face challenges in producing a comprehensive solution that appeals to the larger community.


The authors have worked on numerous innovation projects with various entities, including companies, academic institutions, governments, and militaries. Some of these projects involved generative AI and some did not. This research and experience have shown that the technology can help organizations overcome these challenges. It can enhance the creativity of employees and customers by helping them generate and identify innovative ideas, and it can improve the quality of initial ideas. The authors have observed this in the following five ways:

1. Stimulate Divergent Thinking

Generative AI can promote divergent thinking by creating associations among distant concepts and generating ideas stemming from these associations. Here’s an example of how we used Midjourney, a text-to-image algorithm capable of identifying analogical resemblance between images, to produce novel product designs based on textual prompts from a human. (For the examples in this article, we used Midjourney, ChatGPT, and Stable Diffusion, among other available generative AI tools.) We tasked Midjourney with creating an image that merges an elephant and a butterfly, resulting in the creation of the “phantafly.”

We then utilized the detailed rendering from Midjourney to inspire prompts in Stable Diffusion, another popular text-to-image model. Stable Diffusion generated a variety of ideas for different product categories, such as chairs and artisanal chocolate candies (see images below).

This rapid and cost-effective production of a multitude of designs allows a company to swiftly evaluate a wide range of product concepts. For example, a clothing company employing generative AI to create new T-shirt designs could remain up-to-date with trends and offer a constantly evolving selection of products to its customers.

Consider another instance of how this technology can connect ideas to generate concepts that an individual or team might not have conceived on their own. We used ChatGPT, a form of generative AI known as a large language model, to guide idea generation. We tasked it with generating ideas through trisociation, which links three distinct entities (an extension of the bisociation creativity technique). Our team gave ChatGPT the following prompt: “You will play the role of an ideator. You will randomly generate 10 common nouns. You will then randomly select any two of the 10 nouns. Following that, you will request a third noun from me. Subsequently, you will come up with a business concept by combining or associating the two nouns you chose, along with the noun I provided.”

ChatGPT generated the nouns “food” and “technology.” When asked, we supplied the additional noun “car.” In response, ChatGPT formulated this business concept promptly: “A smart food-delivery service that utilizes self-driving cars to transport meals to customers. The technological aspect may involve employing AI to optimize delivery routes, monitor food temperature in real time, and offer customers real-time updates on their orders. The service could target busy professionals and families seeking convenient and healthy meal options without compromising taste and quality.”

In a separate instance, ChatGPT presented the nouns “airline” and “chair.” Upon prompting, we contributed “university,” and ChatGPT devised a business idea that offers a convenient, cost-effective means for students and academics to travel to conferences and workshops globally, combined with access to a library of educational books during the flight. It suggested that the company could be named Fly and Study or Edu-Fly.
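The trisociation procedure splits naturally into a random step and a prompting step. In the authors’ version, ChatGPT did everything, including picking the nouns. The sketch below instead moves the random selection into ordinary code, so the draw can be reproduced with a seed, and only builds the final prompt text. The noun pool, the function names, and the prompt wording are hypothetical. Sending the prompt to a model is left out.

```python
import random

# Hypothetical noun pool; in the authors' experiment ChatGPT chose its own nouns.
NOUN_POOL = [
    "food", "technology", "airline", "chair", "garden", "music",
    "bicycle", "library", "coffee", "robot", "ocean", "lamp",
]

def pick_two_nouns(rng, pool=NOUN_POOL, n=10):
    """Draw n random nouns, then randomly keep two of them."""
    candidates = rng.sample(pool, n)
    first, second = rng.sample(candidates, 2)
    return first, second

def trisociation_prompt(first, second, third):
    """Build a request that links the two random nouns with the human's noun."""
    return (
        "You will play the role of an ideator. Come up with a business concept "
        f"by combining or associating the nouns '{first}' and '{second}', "
        f"along with the noun '{third}'."
    )

rng = random.Random(42)              # fixed seed makes the draw repeatable
first, second = pick_two_nouns(rng)
print(trisociation_prompt(first, second, "car"))  # third noun supplied by a human
```

Keeping the randomness outside the model is a design choice, not part of the authors’ method. Language models are not reliable sources of uniform random choices, whereas a seeded generator is.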

2. Challenge Expertise Bias

At the initial stages of new-product development, unconventional designs created by generative AI can inspire designers to think beyond their preconceived notions of what is possible or desirable in a product, both in terms of form and function. This approach can lead to solutions that humans may not have conceived using a traditional approach, where functions are established first and the form is subsequently designed to accommodate them.

Such inputs can help counter biases such as design fixation (reliance on standard design forms), functional fixedness (inability to imagine a use beyond the traditional one), and the Einstellung effect, where individuals’ prior experiences hinder them from considering new problem-solving methods.

Here is an example of this process. We tasked Stable Diffusion with generating generic designs of crab-inspired toys without providing any functional specifications. We then imagined functional capabilities after reviewing the designs. For instance, among the collection of crab-inspired toys displayed below, the image in the top left could be developed into a wall-climbing toy, while the adjacent image could function as a toy that launches a small ball across a room. The crab on a plate near the center could be transformed into a slow-feeder dish for pets.

This is not an entirely new approach to creating unique products. Much of the architecture and many of the rides in theme parks like Disney World have been influenced by a desire to recreate scenes and characters from a story. However, generative AI tools can serve as a catalyst for a company’s imaginative designs.

3. Assist in Idea Evaluation

Generative AI tools can aid in various aspects of the early stages of innovation, including making ideas more specific, evaluating them, and at times combining them. Consider an innovation challenge focused on identifying ways to minimize food waste.

ChatGPT evaluated the advantages and disadvantages of three raw ideas: (1) packaging with dynamic expiration dates, that is, labels that automatically change dates or colors based on environmental conditions; (2) an app to facilitate food donations; and (3) a campaign to educate people about expiration dates and their significance in terms of freshness and suitability for use. ChatGPT provided a balanced analysis of the pros and cons that resembled what one might expect from two people discussing the merits of such ideas.

For instance, when ChatGPT assessed the concept of dynamic expiration-date packaging, it concluded that it would help consumers understand product shelf life better and prompt food manufacturers to produce smaller, more frequently replenished batches for grocery shelves. Additionally, ChatGPT highlighted that dynamic expiration dates might necessitate substantial changes to the manufacturing and packaging process, potentially increasing costs for both manufacturers and consumers.

Regarding the food-donation app, ChatGPT found that it could encourage people to utilize their food before it spoils and reduce food waste by donating unopened, edible food to those in need. However, it cautioned that the app might require a large user base to be effective and that transportation and distribution of food from various unregulated sources could pose safety concerns.


The benefits of educating consumers on expiration labels included boosting consumer awareness of the meaning of different expiration labels and helping them make more informed decisions about food purchases and waste. However, ChatGPT cautioned that this educational program might be overly complicated due to the lack of standardization in expiration dates across different food products. It also warned about the potential high costs associated with educating users on various expiration dates, especially if the program involves widespread campaigns or educational materials.

Generative AI can help humans assess aspects of creativity such as novelty, feasibility, specificity, impact, and workability. We asked ChatGPT to evaluate the same examples against these criteria.

Here is its evaluation of the dynamic expiration-date packaging concept:

Uniqueness: Moderately original. While there have been efforts to create smart packaging that can extend the shelf life of food products, the concept of dynamic expiration dates is still emerging.

Feasibility: Implementation may be challenging as it requires the development of new packaging materials and technologies. It also necessitates collaboration with food manufacturers, regulators, and distributors.

Specificity: It’s specific by addressing the issue of food waste, providing consumers with more accurate expiration dates for their food.

Impact: It could have a significant impact in reducing food waste and enhancing food safety. By receiving more accurate expiration dates, consumers would be more likely to consume their food before it spoils.

Workability: This would likely require substantial resources and expertise to develop and implement and may take several years to fully achieve its potential.

Here is ChatGPT’s assessment of the app for donating food close to expiration:

Uniqueness: Not particularly innovative. There already exist many programs and organizations dedicated to reducing food waste and donating surplus food to those in need.

Feasibility: Highly feasible. There are numerous established models and an existing infrastructure to support food donation efforts.

Specificity: It is specific in aiming to reduce food waste and address issues of food insecurity.

Impact: It has the potential to make a significant impact by addressing both food waste and hunger. Redirecting surplus food to those in need can help reduce food waste and also improve food security for vulnerable populations.

Workability: Highly workable as it can be quickly implemented at a relatively low cost. It would require partnerships with food manufacturers, distributors, food banks, as well as volunteers to collect and distribute the donated food.

Finally, here is how ChatGPT evaluated the idea of educating users on different types of expiration dates:

Uniqueness: Not particularly innovative. Efforts have already been made to educate consumers on the meaning of expiration dates and on how to properly store food to extend its shelf life.

Feasibility: Highly feasible. It can be implemented through various means, such as public awareness campaigns, educational materials, and media outreach.

Specificity: Specific in aiming to educate consumers on the different expiration dates and how to properly store food to reduce food waste.

Impact: Potentially significant. Educating consumers about the different expiration dates and proper food storage can make them more mindful of food waste and prompt them to take steps to reduce it.

Workability: Highly workable and can be implemented at a relatively low cost. It would require collaboration with food industry groups, government agencies, and other organizations to develop and distribute educational materials.

Using ChatGPT’s assessments, these three concepts could be evaluated fairly easily, or quickly scored and ranked according to the most important criteria.
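One way to quickly score and rank the three ideas is to take a weighted average over the five criteria. This sketch assumes that the qualitative verdicts have first been turned into ratings from 1 to 5. The ratings below are a hypothetical, rough translation of ChatGPT’s wording (for example, “Highly feasible” as 5 and “Not particularly innovative” as 1), not numbers from the source. The weights are also illustrative.

```python
# The five criteria from ChatGPT's evaluation above.
CRITERIA = ("uniqueness", "feasibility", "specificity", "impact", "workability")

def weighted_score(ratings, weights):
    """Weighted average of 1-5 ratings across all five criteria."""
    total_weight = sum(weights[c] for c in CRITERIA)
    return sum(ratings[c] * weights[c] for c in CRITERIA) / total_weight

def rank_ideas(ideas, weights):
    """Return idea names ordered from highest to lowest weighted score."""
    return sorted(ideas, key=lambda name: weighted_score(ideas[name], weights), reverse=True)

# Hypothetical ratings: a rough translation of ChatGPT's verbal verdicts into numbers.
IDEAS = {
    "dynamic packaging": {"uniqueness": 3, "feasibility": 2, "specificity": 4, "impact": 4, "workability": 2},
    "donation app": {"uniqueness": 1, "feasibility": 5, "specificity": 4, "impact": 4, "workability": 5},
    "education campaign": {"uniqueness": 1, "feasibility": 5, "specificity": 4, "impact": 3, "workability": 5},
}

EQUAL = {c: 1 for c in CRITERIA}
# Hypothetical priorities for an organization that values novelty and impact most.
NOVELTY_FIRST = {"uniqueness": 4, "feasibility": 1, "specificity": 1, "impact": 2, "workability": 1}

print(rank_ideas(IDEAS, EQUAL))          # ['donation app', 'education campaign', 'dynamic packaging']
print(rank_ideas(IDEAS, NOVELTY_FIRST))  # ['dynamic packaging', 'donation app', 'education campaign']
```

With equal weights, the highly feasible donation app comes first. Weighting uniqueness and impact more heavily moves the dynamic-packaging concept to the top. The ranking therefore depends as much on the chosen weights as on the ratings themselves.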

4. Support Idea Refinement

Generative AI tools can address a critical challenge encountered in idea contests: amalgamating or merging numerous ideas to generate much stronger ones. For instance, an organization seeking to secure a contract or grant to develop a food-waste-reduction program can use these tools to combine the three concepts discussed earlier. We accomplished this in two stages. Initially, we asked ChatGPT to further elaborate on each of the concepts:

“The dynamic expiration dates concept is a solution designed to reduce food waste. It involves integrating technology into packaging that can track and adjust the shelf life of products in real time. By using sensors, the expiration date can be updated based on changes in environmental factors, providing consumers with a more accurate estimate of when a product will go bad.”

“Providing an app that allows individuals to donate food nearing expiration in an effort to minimize food waste by offering unopened, consumable food to those in need. The app connects people with excess food to local organizations that can utilize it. Through the use of technology to facilitate food donations, the app can aid in cutting down food waste while also catering to those in need.”

“Teaching users about various types of expiration dates can help them make informed choices regarding purchasing and consuming food, thus reducing waste and saving money. Through clear explanations of terms such as ‘best before,’ ‘use by,’ and other expiration labels, a campaign aimed at educating users can assist them in better understanding how to determine the safety of food and when to dispose of it.”

Next, we asked ChatGPT to unite all three concepts into a single food-waste program. It quickly produced the following description: “The comprehensive food-waste-reduction solution entails developing intelligent packaging with dynamic expiration dates, establishing a surplus food donation program, and educating the public about the significance of understanding food expiration dates.

The intelligent packaging assists consumers in making informed decisions about food freshness, the donation program ensures surplus food is used effectively, and education raises awareness about the implications of food waste. This solution has the potential to significantly cut down on food waste and contribute to a more sustainable future by empowering consumers, collaborating with industry and government, and making more effective use of surplus food.”
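The two-stage process of first elaborating each idea and then merging the elaborations can be scripted and rerun on every batch of contest entries. In this sketch, the model is any function that takes a prompt string and returns a reply. No real API is called. The function names and prompt wording are hypothetical, not the prompts the authors used.

```python
from typing import Callable, List

# Hypothetical interface: any function that sends a prompt to a model and returns its reply.
Model = Callable[[str], str]

def elaborate(model: Model, idea: str) -> str:
    """Stage 1: expand one raw idea into a fuller description."""
    return model(f"Elaborate on this food-waste-reduction concept: {idea}")

def merge(model: Model, descriptions: List[str]) -> str:
    """Stage 2: ask for a single program that unites all the descriptions."""
    bullet_list = "\n".join(f"- {d}" for d in descriptions)
    return model("Unite these concepts into a single food-waste program:\n" + bullet_list)

def refine_and_merge(model: Model, ideas: List[str]) -> str:
    """Run stage 1 on every idea, then stage 2 on the results."""
    return merge(model, [elaborate(model, idea) for idea in ideas])
```

You can dry-run the pipeline by passing a stub model, as in a unit test. It makes one call per idea plus one final merge call. Swapping in a real model client would change only the function passed as `model`.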

5. Foster Collaboration with and Among Users

When developing new product concepts or designs, generative AI can help foster collaboration between a company’s designers and potential users of a product, and among the users themselves. In essence, the technology streamlines the co-creation of new offerings, making it simpler and more cost-effective. For instance, a company can give users tools to create designs and then produce a personalized version of the product based on each customer’s preferences. Alternatively, users’ designs could be shared on a crowdsourcing platform for evaluation by the community. The top designs could then be further developed by additional collaborators.

Human creativity knows no bounds. However, many people are held back from contributing new ideas because they find it hard to communicate them effectively in written or visual form. Generative AI can eliminate this barrier. As with any genuinely innovative capability, there will undoubtedly be resistance to it. Established innovation processes will need to evolve. Those with vested interests in the traditional approach, especially those concerned about becoming obsolete, will resist. Yet the transition will be worthwhile. It offers the opportunity to significantly increase the number and novelty of ideas from both within and outside the organization.

The greatest potential of generative AI lies not in replacing humans but in assisting humans in their individual and collective efforts to generate previously unimaginable solutions. It can truly democratize innovation.

The rise of artificial intelligence (AI) has introduced a new dimension to the creative process, enabling artists to explore uncharted territories and push the limits of their imagination. This blog post delves into how AI can serve as a valuable ally for artists, presents a general method for artists seeking to integrate AI into their creative work, and illustrates the approach with a specific case study.

I. AI’s Role in the Creative Process

Artists have perpetually sought innovative methods to express their ideas, and AI is now empowering them to achieve precisely that. Whether one is a painter, musician, writer, or any other type of creative, AI holds the potential to enhance the artistic journey in myriad ways:

Inspiration and Idea Generation: AI can scrutinize extensive data and generate distinctive concepts that may spark an artist’s next masterpiece. It can forge unexpected connections between diverse concepts, fueling the artist’s imagination.

Visual Exploration: For visual artists, AI-generated images can act as starting points for new creations. AI algorithms can devise abstract patterns, transform images, or produce unique compositions that artists can integrate into their work.

Music Composition: Musicians can harness AI to compose melodies, harmonies, and even complete pieces of music. AI is adept at analyzing existing compositions and developing original musical ideas, granting artists the freedom to experiment with new genres and styles.

Textual Creativity: Writers and poets can benefit from AI-generated text prompts, which can kick-start the writing process. AI has the ability to generate sentences, ideas, or even entire paragraphs that serve as springboards for crafting engaging narratives.

Yet, working with AI presents a unique set of obstacles that artists must navigate. While AI can be a potent tool, finding the right balance between human intuition and technological support remains a delicate challenge. Key difficulties include:

Preserving Artistic Authenticity: One of the primary obstacles is maintaining the artist’s distinct voice and authenticity. AI-generated content might overshadow the creative process at times, leading to a loss of the artist’s individuality and emotional depth in the final artwork.

Avoiding Over-reliance on AI: Depending excessively on AI-generated elements can impede an artist’s own creative skills. Artists may begin to rely too heavily on AI for ideas, stifling their ability to innovate and think critically.

Managing Skill Development: Embracing AI may tempt artists to skip traditional skill-building processes, potentially resulting in a decline in manual techniques and artistic proficiency over time.

Underestimating Artistic Intuition: AI-generated content is rooted in patterns and data, often missing the intuitive leaps and creative insights that artists make. This can lead to artworks that lack the spontaneity and imaginative leaps unique to human creativity.

Blurring of Boundaries: The distinction between the artist’s creation and the AI’s contribution can become ambiguous. Artists must grapple with ethical concerns related to the authorship and ownership of AI-generated content. Determining who owns the rights to AI-enhanced artwork can be complex, especially when AI generates significant portions of the composition. How can artists reap the benefits without becoming overly dependent on technology? Let’s dive in.

II. A Generalized Approach

The challenges of using AI in art highlight the complex interplay between technological innovation and artistic expression. As artists embrace AI as a creative tool, they must navigate these challenges with mindfulness and creativity, finding ways to harness the benefits of AI while preserving their unique artistic identity and emotional resonance.

Bottom line: The transformative potential of artificial intelligence within the artistic domain is not about replacing the artist’s touch but about enhancing it. Despite AI-specific challenges, there are strategic approaches for navigating the uncharted waters of AI-enhanced artistic expression without sacrificing the human touch. When working with AI, artists could use the following approach:

Identify Your Goal: Determine the specific aspect of your creative process that you’d like to enhance using AI. Whether it’s generating ideas, creating visuals, composing music, or something else, defining your objective is the first step.

Choose the Appropriate AI Tool: Various AI tools and platforms are tailored to different creative domains. Research and select the AI tool that aligns with your artistic goals. For example, artists might consider Google’s DeepDream for image manipulation or Jukedeck for music composition (note that Jukedeck was acquired by ByteDance in 2019 and is no longer publicly available).

Generate AI-Enhanced Content: Once you’ve chosen your AI tool, start creating AI-enhanced content. For example, if you’re a painter, experiment with AI-generated images that can serve as the foundation for your artwork.

Incorporate AI Output: Integrate the AI-generated content into your creative work. This could involve blending AI-generated visuals into a traditional painting or weaving AI-generated melodies into a musical composition.

Iterate and Collaborate: Don’t hesitate to experiment with multiple iterations of AI-generated content and integrate feedback from peers or mentors. Collaboration can help refine your creative vision and ensure a seamless integration of AI elements.

Add Your Artistic Touch: While AI can enhance the creative process, remember that your artistic touch remains crucial. Use AI-generated content as a foundation, and then infuse it with your unique style, emotions, and personal narrative.

Let’s see this method in action with a concrete case study.

III. A Case Study: Emily’s Forest Painting

Step 1: Clarifying the Objective

Emily, a budding visual artist, was excited to infuse her work with new ideas. She aimed to experiment with abstract patterns that could introduce a new dimension to her traditional paintings. By clarifying her objective, Emily set a clear direction for her exploration of AI-generated content.

Step 2: Choosing the Right AI Tool

After exploring various AI tools, Emily settled on Google’s DeepDream for its ability to create surreal, abstract images. DeepDream feeds an image through a convolutional neural network trained for image recognition and then adjusts the image to amplify the patterns the network detects. That made it a good fit for Emily’s goal of creating unique visual patterns she could later integrate into her art.
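For readers curious what "modifying an image with a neural network" means mechanically, here is a toy sketch of the idea at DeepDream's core: gradient ascent on the pixels themselves, nudging the image so that a network layer responds more strongly to it. This is an illustration under heavy assumptions, not Google's implementation. A single hand-written 3x3 edge filter (`KERNEL`) stands in for a trained network layer, the 8x8 grid `forest` is a made-up stand-in for Emily's photo, and all function names are hypothetical. The real DeepDream uses a pretrained convolutional network, several image scales, and pixel clipping, none of which is modeled here.

```python
# Toy sketch of DeepDream-style gradient ascent (illustrative assumptions only):
# a hand-written edge filter replaces a trained CNN layer, and the "photo"
# is a tiny grayscale grid of floats.

# Hypothetical "feature detector": responds to vertical edges.
KERNEL = [[-1.0, 0.0, 1.0],
          [-1.0, 0.0, 1.0],
          [-1.0, 0.0, 1.0]]


def activations(img):
    """Valid 2D cross-correlation of img with KERNEL (the stand-in 'layer output')."""
    h, w, k = len(img), len(img[0]), len(KERNEL)
    return [[sum(KERNEL[a][b] * img[i + a][j + b]
                 for a in range(k) for b in range(k))
             for j in range(w - k + 1)]
            for i in range(h - k + 1)]


def objective(img):
    """Score to maximize: half the squared L2 norm of the activations."""
    return 0.5 * sum(v * v for row in activations(img) for v in row)


def gradient(img):
    """Gradient of objective w.r.t. each pixel: activations pushed back through KERNEL."""
    h, w, k = len(img), len(img[0]), len(KERNEL)
    grad = [[0.0] * w for _ in range(h)]
    for i, row in enumerate(activations(img)):
        for j, act in enumerate(row):
            for a in range(k):
                for b in range(k):
                    grad[i + a][j + b] += act * KERNEL[a][b]
    return grad


def dream(img, steps=10, lr=0.05):
    """Repeatedly step the pixels uphill so the filter's response grows."""
    img = [row[:] for row in img]  # work on a copy; leave the input untouched
    for _ in range(steps):
        grad = gradient(img)
        # Normalize the step by the mean absolute gradient so its size stays stable.
        scale = sum(abs(g) for row in grad for g in row) / (len(img) * len(img[0]))
        scale = scale or 1.0
        img = [[p + lr * g / scale for p, g in zip(prow, grow)]
               for prow, grow in zip(img, grad)]
    return img


# Hypothetical 8x8 stand-in for the forest photo.
forest = [[((x * 3 + y * 5) % 7) / 7.0 for x in range(8)] for y in range(8)]
dreamed = dream(forest)
print(f"before: {objective(forest):.3f}  after: {objective(dreamed):.3f}")
```

Each step moves the pixels in the direction that increases the filter's squared response, so the score rises with every iteration. With a trained network in place of the single edge filter, the amplified features become the dreamlike textures DeepDream is known for.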

Step 3: Creating AI-Enhanced Content

Armed with DeepDream, Emily began by choosing a tranquil image of a forest scene as her starting point. She uploaded the image to the AI tool with a clear intention: to transform the forest into a mesmerizing array of colors and shapes that could inspire her next painting. The resulting version bore little resemblance to the original. The once serene forest became a kaleidoscope of vibrant colors and intricate patterns.

Step 4: Incorporating AI Output

After receiving the AI-generated image, Emily readied her canvas. She used acrylic paints in a color scheme inspired by the vibrant hues of the AI output. Drawing on her expertise as a traditional painter, she painstakingly reproduced the dreamlike patterns on the canvas, carefully choosing brushes and techniques that captured the essence of the AI-generated image while preserving her own artistic flair.

Step 5: Revising and Working Together

As Emily’s painting progressed, she shared her developments with fellow artists and mentors. The collaborative feedback proved immensely valuable in helping her address challenges and make well-informed decisions regarding which patterns to highlight. The input she received not only honed her artistic decisions but also validated the innovative direction she was pursuing.

Step 6: Infusing Her Artistic Style

Throughout the process, Emily consistently infused her work with her emotions and viewpoint. She harmonized the AI-generated patterns with her artistic approach, ensuring her own artistic voice remained prominent. This amalgamation of human creativity with AI-generated content brought about a genuinely collaborative painting between artist and machine.

Final Remarks

Emily’s artistic journey culminates in a mesmerizing painting that merges AI-generated abstract patterns with her distinctive artistic style. The final artwork portrays a serene forest scene transformed into a burst of lively colors and intricate shapes. The abstract patterns, inspired by the AI-generated content, flow naturally within the composition, evoking a dreamy atmosphere that resonates with viewers.

The painting conveys a sense of innovation while staying true to Emily’s emotional depth and personal narrative. Here, the collaboration between artist and machine strikes the right balance between the vivid AI-inspired patterns and the nuanced brushstrokes that bear Emily’s signature artistic style.

As viewers engage with the artwork, they are drawn into a world where technology and tradition intersect. This example illustrates the possibilities that open up when artists embrace AI as a tool to elevate their creative expression. Emily’s journey not only broadens her artistic horizons but also showcases the transformative potential of AI in the realm of visual art.

Ultimately, by merging AI-generated innovation with human ingenuity, artists can encourage audiences to contemplate the interplay between art, technology, and the boundless reach of imagination.

AI Vs. Human-Made Art: Key Points of Comparison

Since artificial intelligence began producing artworks, AI art has become a widely discussed topic, and the more collectors are willing to pay for these pieces, the more attention they receive. Here are some key characteristics that differentiate AI art from traditional art and spark discussion:

1. Aesthetics

The main issue is aesthetic: not everyone finds AI-generated creations appealing. For example, GANism paintings (works produced with generative adversarial networks) that feature human-like characteristics are admired by some but unsettle others, a reaction known as the uncanny valley effect. Robbie Barrat’s AI-generated nude portraits are an example of such art.

However, many traditional artworks have also faced criticism but eventually made an impact on art history. Some well-known examples include:

- Quinten Massys – The Ugly Duchess

- Francis Bacon – Three Studies of Lucian Freud

- Francisco Goya – Saturn Devouring One of His Sons

- Pieter Bruegel the Elder – The Beggars

- Frida Kahlo – My Birth

Bold statements are not uncommon in the art world, whether traditional or AI-generated. Some are widely appreciated, while others take time to gain recognition. Overall, aesthetics are highly subjective and cannot be generalized.

2. Effort

Another important factor when comparing AI art and human-created art is the level of effort put into the creation of a piece.

Art enthusiasts often contrast the years of work that went into a traditional painting, such as Leonardo da Vinci’s Mona Lisa, with the short time an AI algorithm needs to generate multiple artworks. However, this comparison does not always hold, because not every traditional painting takes years: Vincent van Gogh, for example, produced on average about one painting a day during his final months in Auvers-sur-Oise in 1890.

3. Value

Some argue that because an algorithm lacks emotions, empathy, and thoughts, it cannot create art with the same sentimental value as a human. After all, machines do not face the constraints and challenges that many human artists do.

However, an opposing viewpoint holds that the algorithm itself can be considered a work of art. This view makes AI art more relatable to audiences, because it frames the technology as a highly autonomous tool in the hands of a human creator.

Verdict: Can AI Art Fully Substitute Human-Made Paintings?

Art has always been a highly subjective matter, with beauty lying in the eye of the beholder. Furthermore, rapid technological advancements are bringing to life ideas that seemed unimaginable a decade ago. Therefore, making a definitive prediction about the future of AI and human-made art is nearly impossible.

However, some things are certain. The use of AI in creative fields is an irreversible trend, so we can expect increasingly advanced algorithms and results.

At the same time, recent global events have once again highlighted the importance of human values, and many people look to art for representations of profound emotion.

As a result, it is likely that AI-generated and human-made creations will progress together and become distinct niches within the same industry, inspiring and complementing each other rather than competing.
