Artificial intelligence can also be used to plan travel routes and bundle tips for tourists. The industry is following the trend closely. But how does it work in practice? A city guide tried it out.

Brent Foster is curious. The Californian has been working as a city guide in his adopted home of Hamburg since 2010 – he knows the city inside and out. But in the age of ChatGPT, his job could soon be under threat. Tourists who come to Hamburg can also use the artificial intelligence ChatGPT to generate walking routes or put together a table with travel tips.

From a three-week tour of Thailand to a short walk through Hamburg: ChatGPT seems to know its stuff. A threat to tourism experts like Foster? At Hamburg’s Rathausmarkt, the city guide tests the program – with the following “prompt” (i.e., the instruction): “Tell me a walking tour of Hamburg that takes one hour.”

The request is still quite general, and the answers are just as general – start at Rathausmarkt, continue to Jungfernstieg, along the Inner Alster, return via Mönckebergstrasse and then to the main station to admire its “impressive architecture”. Foster’s verdict: you can do that, but it’s pretty standard. Is there anything more that can be done?

Statues added at the town hall

Travel planning with ChatGPT is still an insider tip, but is already being used by influencers and travel bloggers. Influencer Diana zur Löwen, for example, recently used the tool to plan a trip to London. Her tip: define specifically what interests you have and what are no-go criteria on a trip.

The more ChatGPT knows about your profile and travel wishes, the better it can respond. It doesn’t have to be a question: “You can also ask counter-questions on ChatGPT, so that you can really have a whole conversation,” says zur Löwen. “It’s really worth trying it out bit by bit.”

City guide Foster is testing such a conversation with the AI at Hamburg City Hall and wants to know from ChatGPT what the statues on the outside facade mean. The answer comes promptly, but is disappointing: of the five figures mentioned, the artificial intelligence has correctly identified only one, and it also names figures that are not there at all.

For example, the long-time city guide has never spotted Karl Marx on the facade, and an inquiry to the tourist office shows that no one there has heard of a Karl Marx statue on the building either. The digital travel companion is still prone to errors. Influencer zur Löwen advises checking the tips “or just using them as a kind of basis and then thinking about it a bit yourself and checking again.”

TUI plans to use the technology soon

Despite the susceptibility to errors of artificial intelligence, the travel industry is closely monitoring developments and is already developing its own ideas on how the technology could be used. “I see that test projects are being called for everywhere, that there is great curiosity everywhere,” says the chairman of the German Travel Association’s Committee for Digitalization, Oliver Rengelshausen. The topic is being discussed at conferences, association members are being trained, and ideas are being debated.

Some ideas are soon to be implemented at the tourism group TUI. Christian Rapp is the TUI Group’s press spokesman for technology issues and reports, among other things, on AI projects for travel agencies: “In the Netherlands, we are looking at how we can help travel advisors in travel agencies find information more quickly within our own internal information systems.”

The aim is not to replace workers in travel agencies, but AI can help them access information more quickly. The expectation is “that certain tasks will become easier and can be completed more quickly, so that our colleagues in travel agencies actually have more time for what their actual job is: providing personal advice to customers.”

Elbphilharmonie as an “insider tip”

City guide Foster is not worried that he could become replaceable with his Hamburg tours – when he returns from the ChatGPT round, he points to a bright yellow umbrella and a group of tourists in front of Hamburg City Hall: a city tour by a colleague from “Robin and the Tourguides”. Well attended. Foster believes that this personal contact remains irreplaceable.

And ChatGPT has not yet convinced him: important information was missing from the short tour, mistakes crept in when he asked questions, and the route was planned in a somewhat impractical way. Perhaps a tool for getting started in a new city? “You might get a first glimpse of a city you don’t know,” he says.

At the very end, he tests again whether a very precise query might produce better results: What insider tip does ChatGPT have for lovers of classical music in Hamburg? The answer is sobering: The Elbphilharmonie is recommended as an insider tip. Then, however, the artificial intelligence also suggests concerts at the Hamburg University of Music, for example, which are actually more of an insider tip. Still, travelers will probably have to be a little patient with the artificial intelligence and experiment a lot.

Whether you are embracing the future of AI or watching The Terminator with a sense of foreboding, the rapid rise of ChatGPT cannot be ignored. The platform, owned by OpenAI, allows users to converse with an AI-powered chatbot and gained over 100 million users in three months after its launch in late 2022, sparking controversy. (The number of users fell for the first time in June 2023, indicating that the initial interest had cooled.)

ChatGPT’s rise has forced society to face questions about the role of artificial intelligence. Companies like Bing, Expedia, and Matador Network have quickly adopted AI in travel planning tools.

As someone interested in tech, I feel both doubtful and open-minded about AI’s future. As a travel editor, I wondered whether ChatGPT could create a comprehensive travel itinerary or would produce something more concerning.

So I had ChatGPT plan a weekend trip to Washington, D.C., a destination I wasn’t familiar with.

I planned to stay at the Waldorf Astoria Washington D.C., near major attractions like the National Mall, the U.S. Capitol, and the White House. (Although the White House, a recognizable U.S. building, was noticeably absent from my itinerary.) My trip was entirely at the mercy of the robot.

Here’s what I learned about using ChatGPT to plan a trip and if I’d use it again as a travel tool.

If planning is your favorite part of travel, using ChatGPT might take away the excitement of the discovery phase. Researching a destination, browsing social media for recommendations, and scouring Google Maps for hidden gems are what excite me about a trip. With ChatGPT, I missed out on this preparation phase and felt disconnected from my itinerary. The anticipation and payoff I usually get from visiting a new place were essentially absent with ChatGPT as the planner.

ChatGPT can help you get organized if used correctly. AI can be a solid planning partner for travel-planning enthusiasts. For example, ChatGPT was the “big picture” guy for major stops while I managed the detailed itinerary during my trip to D.C. In another instance, asking ChatGPT to create a logical route for my trip to Iceland’s Westfjords helped me get organized. In that case, I was the big-picture planner and ChatGPT helped with the details.

Using ChatGPT takes practice. Like any tool, mastering ChatGPT will take time, and crafting a query that covers all bases may take a couple of tries. Your opinion of the tool will depend on your patience level. For some, it may be a fun puzzle to solve, while for others, it may become tedious, especially with the need to fact-check and adjust the schedule. Being specific with your ask will help ChatGPT tailor an itinerary to your needs. Details such as travel dates, interests, accommodation, budget, group size, and if it’s your first time visiting the destination are essential.

For instance, here was my final request for planning my trip to Washington, D.C.:

Hello ChatGPT! My partner and I plan to visit Washington, D.C. from July 6 to July 8. Can you put together a 2-day travel plan for us that includes restaurants, bars, and places of interest based on the details below?

- This will be our first time in D.C.

- We’ll be staying at the Waldorf Astoria DC.

- Our arrival is scheduled for 1 p.m. on July 6, and we’ll be leaving at 4 p.m. on July 8.

- We are in our mid-20s and are enthusiastic about art, history, food, and music.
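The details listed above (dates, lodging, interests, first visit, budget) can be gathered in a small structure and turned into a request of the same shape. The following Python sketch is only an illustration: `TripRequest`, `build_prompt`, and all field names are hypothetical, and the closing line about neighborhoods and travel times is an assumed addition rather than part of the original request.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TripRequest:
    """Trip details worth stating up front; all field names are hypothetical."""
    destination: str
    start_date: str
    end_date: str
    travelers: str
    lodging: str
    interests: List[str] = field(default_factory=list)
    first_visit: bool = True
    budget: Optional[str] = None


def build_prompt(trip: TripRequest) -> str:
    """Assemble a plain-text request that states each detail explicitly."""
    lines = [
        f"{trip.travelers} plan to visit {trip.destination} "
        f"from {trip.start_date} to {trip.end_date}.",
        "Can you put together a travel plan that includes restaurants, "
        "bars, and places of interest based on the details below?",
        f"- First visit: {'yes' if trip.first_visit else 'no'}",
        f"- Staying at: {trip.lodging}",
    ]
    if trip.interests:
        lines.append(f"- Interests: {', '.join(trip.interests)}")
    if trip.budget:
        lines.append(f"- Budget: {trip.budget}")
    # Assumed extra instruction, not part of the original request.
    lines.append("- Please group stops by neighborhood and note travel times.")
    return "\n".join(lines)


example = TripRequest(
    destination="Washington, D.C.",
    start_date="July 6",
    end_date="July 8",
    travelers="My partner and I",
    lodging="the Waldorf Astoria DC",
    interests=["art", "history", "food", "music"],
)
print(build_prompt(example))
```

Keeping the details in one place makes it easier to change a single item, such as the dates, and regenerate the request instead of retyping it.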

Please be aware that ChatGPT might not always provide accurate information, so additional research is required.

Using ChatGPT as a travel planner has its downsides, mainly due to the possibility of inaccurate information. The latest model, ChatGPT-4, which is available at a cost of $20 per month, was last updated in March 2023, while the free version has not been updated since September 2021. This means that a suggested itinerary may include closed businesses or outdated entrance fees and hours of operation.

It’s also important to note that ChatGPT is not adept at factoring in travel times or creating an efficient timetable unless specifically requested. During this trip, I found myself moving between neighborhoods rather than following a logical itinerary. While travel times of 20-30 minutes on the train here and there may not seem significant, they can quickly accumulate, causing disruptions to your schedule and potentially leading to fatigue.
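To see how quickly those rides add up, the hops between neighborhoods in an itinerary can be counted and compared with a grouped ordering. This is a rough sketch under stated assumptions: the stop order and neighborhood labels are illustrative, and `MINUTES_PER_HOP` is an assumed midpoint of the 20-30 minute rides described above, not a measured value.

```python
from typing import List, Tuple

Stop = Tuple[str, str]  # (place name, neighborhood label)


def count_hops(stops: List[Stop]) -> int:
    """Count how often consecutive stops fall in different neighborhoods."""
    return sum(1 for a, b in zip(stops, stops[1:]) if a[1] != b[1])


def group_by_neighborhood(stops: List[Stop]) -> List[Stop]:
    """Reorder stops so each neighborhood is visited once, in first-seen order."""
    order: List[str] = []
    for _, hood in stops:
        if hood not in order:
            order.append(hood)
    # sorted() is stable, so stops keep their relative order within a neighborhood.
    return sorted(stops, key=lambda s: order.index(s[1]))


MINUTES_PER_HOP = 25  # assumption: midpoint of a 20-30 minute train ride

# Hypothetical ordering; neighborhood labels are illustrative only.
itinerary = [
    ("National Mall", "Mall"),
    ("Ben's Chili Bowl", "U Street"),
    ("Library of Congress", "Capitol Hill"),
    ("9:30 Club", "U Street"),
    ("Capitol Building", "Capitol Hill"),
]

before = count_hops(itinerary) * MINUTES_PER_HOP
after = count_hops(group_by_neighborhood(itinerary)) * MINUTES_PER_HOP
print(f"Transit estimate: {before} min as given, {after} min grouped")
# prints "Transit estimate: 100 min as given, 50 min grouped"
```

The grouping ignores opening hours and time of day (a music venue belongs in the evening, for example), so it is a starting point for manual adjustment rather than a finished schedule.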

While ChatGPT can provide decent recommendations, it is essential to verify opening hours, ticket availability, reservations, and potential impacts of factors such as local holidays or temporary closures on your travel plans. (I discovered this the hard way when I arrived at the African American Civil War Museum in D.C.’s sweltering midsummer heat, only to find the indoor exhibition had been closed for renovations since March.)

At the end of each itinerary generated by ChatGPT, there is a reminder that all itineraries should be fact-checked. However, if you miss this warning or choose to trust the AI without reservations, you may end up with an itinerary that overpromises and underdelivers.

ChatGPT ensures that you cover the essentials . . .

One thing that can almost be guaranteed with ChatGPT is that you won’t miss out on the must-see attractions. Except for the White House, my itinerary included the major attractions that any first-time visitor to the nation’s capital would want to visit, such as the Smithsonian Institution, the National Mall, the African American Civil War Memorial, the Library of Congress, and the Capitol Building. In addition to the major tourist attractions, D.C. institutions like Ben’s Chili Bowl and the 9:30 Club, an iconic music venue that has been around for decades, were also on the list.

While none of these recommendations were surprising, I felt that I was making the most of my relatively limited time in the city. If your goal is to see the highlights, ChatGPT will prioritize getting you there.

. . . but more interesting recommendations and advice are likely to come from a human

Apart from the essential stops, the bars and restaurants suggested by ChatGPT were good, but not exceptional. I did not come away convinced that AI can rival, or even match, recommendations from another human, whether through word of mouth, a travel website, or a Reddit thread on “Best things to do in ____?”

One of my friends, who visits the capital several times a year, mentioned that ChatGPT’s list was fairly good “for people who are only going to go to D.C. once and aren’t looking for any niche experiences” and shared a few suggestions that I found more appealing from the outset.

Another friend, who currently resides in D.C., noted that the itinerary seemed too packed to be enjoyable, and the order of the itinerary “was not ideal in terms of economical travel,” two major points that I also observed.

Overall, seeking recommendations from a person, especially someone you trust to provide solid suggestions, seems to offer a higher likelihood of discovering new openings, local favorites, or hidden gems compared to asking a bot for suggestions.

ChatGPT does not account for the “human element”

It’s rather obvious, but worth stating that ChatGPT is not human and therefore cannot consider the “human element”—those small factors that can derail travel plans. It cannot anticipate how worn out you might be after going from one attraction to another, or the impact of crowds, or sudden changes in weather such as summer heat or rain that could render an itinerary full of outdoor activities impractical. Even if you are initially satisfied with your itinerary, it’s wise to have a backup plan in case the ChatGPT-generated plan goes off track.

My verdict on using ChatGPT for trip planning

As AI travel tools advance, I will continue to test future technology, but at present, I probably wouldn’t use ChatGPT to plan a trip again. Lukewarm recommendations and the missing “human element” aside, I found that I invested as much time in crafting a query, fact-checking, and adjusting my schedule as I would have if I had created an itinerary entirely on my own, minus the usual enjoyment of planning a trip by myself.

In the not-so-distant future of AI-powered technology, a vacation might kick off by telling your phone something like: “I want to go to Los Angeles for a four-day trip in June, when airfares and hotel rates are most favorable, utilizing loyalty rewards points. I’d like to visit a history museum and an amusement park and have dinner reservations at 7 p.m. near the hotel at a restaurant offering vegan options and a great wine list.” And voila, your phone generates the perfect itinerary.

However, for now, travelers using ChatGPT—the powerful new A.I. software already dabbling in creative cocktail recipes and crafting college papers—may need to manage their expectations.

Oded Battat, general manager at Traveland, a Bridgeport, Conn. travel agency, tried out ChatGPT to find potential excursions for clients traveling to Tuscany as part of his work. He received a list of 14 activities, from winery tours to museum visits, with a suggestion to enjoy gelato in the town square of the medieval hill town San Gimignano. “I was already familiar with all these things,” Mr. Battat remarked, but ChatGPT spared him the trouble of compiling the information and presented it in a format he could easily email to a client.

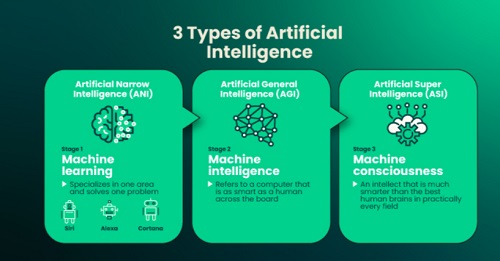

ChatGPT, the service Mr. Battat started using, made its debut in November and has already begun to revolutionize tech-driven industries, including travel. Distinct from the A.I. most consumers are accustomed to—think website chatbots—ChatGPT is “generative,” capable of analyzing or summarizing content from an extensive array of information sources, such as web pages, books, and other literature available on the internet, and using that information to create new, original content. Its sophisticated natural language capabilities enable it to understand and respond more conversationally.

Numerous applications, as well as limitations

The travel industry may undergo a significant transformation. Already, travelers can interact with the system, sharing details like their destination, time of year, and interests, and in return receive a personalized itinerary complete with vibrant descriptions.

A recent request from a reporter for a two-day itinerary to Whistler, British Columbia resulted in ideas such as guided snowshoeing to observe local flora and fauna and a dog-sled ride “with a team of beautiful huskies” for a winter trip. Upon adding further preferences, like a craving for Thai food, ChatGPT adapts its suggestions, providing new restaurant recommendations based on these specifications.

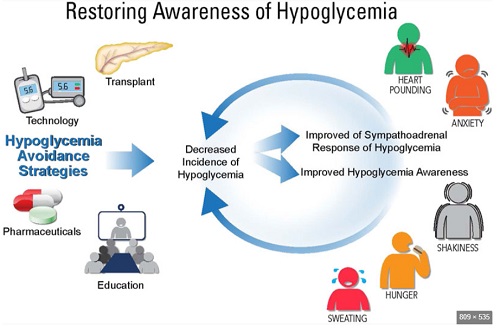

However, ChatGPT does have its limitations. For one, its information database only extends to 2021, and it lacks access to critical, real-time travel-related data, such as airline schedules and weather forecasts. New versions are currently in development, with a major upgrade released recently, and further improvements are expected. Additionally, the software doesn’t always discern between reliable and unreliable internet information, sometimes producing inaccurate responses. OpenAI, the creator of ChatGPT, also warns that the software may occasionally yield “biased content.”

The software is available for anyone to use, accessible for free through the OpenAI website. Tourist bureaus can engage ChatGPT to produce marketing content describing must-see attractions, while travel advisors can utilize it to compose emails to their clients and create social media posts. Airlines, hotels, and rental car companies could integrate it to enhance their virtual agents’ ability to handle a broader range of queries.

One travel advisor mentioned using ChatGPT to craft a “firm but amicable breakup letter” to a client with whom she no longer wished to work. Although the advisor had to refine the prompt (the term for a ChatGPT question or command) a few times to achieve her desired outcome, ultimately, it was successful. “My client said she understood and wasn’t upset with me,” mentioned the advisor, who opted to remain anonymous as she didn’t want her former client to know that ChatGPT had crafted the letter.

A ‘significant new step’

Some individuals in the industry are concerned that advancements in systems like ChatGPT may lead to the displacement of travel advisers, according to Chad Burt, co-president of OutsideAgents, a company based in Jacksonville, Florida, with a network of 8,000 advisers. However, Burt believes that the downfall of travel agents has been anticipated before, and each new technology is simply a tool that can be utilized. He recently conducted a tech tips seminar for his advisers and is in the process of compiling a list of prompts that his advisers can utilize to maximize the software’s potential.

Burt, who has been experimenting with ChatGPT, has used it to generate over 100 itineraries. He noted that it serves as an excellent starting point and can save time on basic tasks, but he emphasized that a competent agent still needs to verify and enhance it. According to Burt, only a human can accurately discern what travelers indicate they desire versus what they genuinely want. The software achieves around 70 or 80 percent accuracy, but Burt stressed that they aim for superior quality.

Expedia, a major online travel company, has been employing A.I. for a number of years to customize recommendations and to power its online virtual adviser. However, ChatGPT represents a “significant new step,” according to Peter Kern, Expedia’s CEO.

Kern sees the new technology as a potential method for offering customers a more conversational way to engage with Expedia, for example, by speaking or typing queries instead of clicking. Expedia also envisions leveraging ChatGPT to refine personalized recommendations by merging its data with customer purchase history, airline tickets, hotel availability, and rental car prices.

Aylin Caliskan, a computer science professor at the University of Washington, who specializes in machine learning and the societal impact of artificial intelligence, predicts that other travel companies will adopt a similar approach, integrating their own data and programming with generative A.I. systems developed by companies like Google, Amazon, and OpenAI to achieve specific objectives.

According to Caliskan, creating these systems entails significant investment, data, and human effort, making it more efficient to build upon them. For instance, a travel insurance company could develop a system using the natural language capabilities of software like ChatGPT to assist travelers in selecting suitable policies or navigating the claims process.

Generative A.I. could also enhance foreign language translation, facilitating conversations with locals, according to Dr. Caliskan. When combined with virtual reality technology, it could enable travel companies to offer customers a virtual “visit” to a destination using a virtual reality headset, all without leaving their homes.

Concerns regarding an ‘A.I. junk land’

Jeff Low, CEO of Stash Hotels Rewards, a company that offers loyalty points for staying at a group of independent hotels, is concerned about the impact of new A.I. like ChatGPT on the lodging industry. The promise of artificial intelligence is that automating routine tasks frees staff to connect personally with guests, but Low believes the reality may be different. He noted that hotels have tended to reduce staff when A.I. was introduced, for example cutting front desk personnel as automated check-in became popular. He stressed that personal interaction is a crucial aspect of travel, and that hotels can distinguish themselves through these connections.

Low also worries that unscrupulous companies could exploit software like ChatGPT to devalue guest reviews on travel sites, which many rely on for making hotel choices. This type of software could potentially facilitate more sophisticated fake reviews, even creating traveler profiles to produce seemingly legitimate reviews over a period of time. While travel companies have systems to combat fake reviews, Low raised concerns about the difficulty in distinguishing legitimate reviews from automated ones.

As more travel providers leverage the capabilities of generative A.I., there are potential downsides to consider. According to Burt, natural language responses can sound very authoritative, leading people to place more trust in them than they should. Furthermore, due to Google’s preference for fresh content when ranking search results, companies aiming to boost their online presence may turn to ChatGPT-like software to generate a growing number of blog and social media posts. Burt believes that this trend could potentially lead to an “A.I. junk land” on the internet.

Despite potential issues, AI-powered advancements could greatly benefit travelers. Chekitan Dev, a professor at Cornell University’s Nolan School of Hotel Administration, suggests that if systems like ChatGPT have access to real-time data, they could seamlessly adjust plans in response to sudden changes. For example, if your flight is delayed, the system could automatically postpone your car rental and inform a restaurant of the need to reschedule your reservation.

The future might bring an autonomous vehicle that anticipates your delayed arrival at the airport, takes you sightseeing, and ultimately guides you to the best pad Thai in town. Another possibility is that AI and virtual reality experts team up to create an almost lifelike vacation experience akin to the “Star Trek” Holodeck, allowing us to travel without leaving home, which is an unexplored domain, according to Dr. Dev.

Artificial intelligence has made its presence known and is shaping discussions. Tech companies are racing to develop AI technology for widespread use, with companies like OpenAI launching the AI chatbot, ChatGPT, last fall. The travel industry has been abuzz with speculation about how these platforms will impact future travel planning.

While some in the travel industry worry that AI technology could replace travel advisors, others are embracing it as a means to enhance the travel planning process.

Can AI streamline vacation planning, allowing you to do it all on your own in record time? Will ChatGPT be up to the task, or is working with a travel agent a better option?

Let’s examine the advantages and disadvantages of using ChatGPT for travel planning.

Although AI software has been a hot topic of discussion lately, with ChatGPT leading the way, some people may not be familiar with the platform.

ChatGPT is an AI-driven chatbot and natural language processing tool that engages in human-like conversations based on user-submitted prompts.

For example, if you’re planning your first trip to Accra, Ghana and aren’t sure where to start, ChatGPT can offer instant advice on the best places to stay, eat, party, and explore, as well as tips to help you save money, avoid crowds, and maximize your trip.

It’s important to note that while the chatbot is useful for travel, it’s also a versatile tool for various purposes. Professionals are using ChatGPT to generate content, write essays, and create cover letters for job applications.

The Benefits of ChatGPT for Travel

Previously, finding the best travel destinations and activities in a new location involved sifting through reviews and conducting extensive searches on search engines. ChatGPT now makes this once-time-consuming task virtually effortless.

Access to a Wealth of Information

ChatGPT’s strength lies in its ability to process vast amounts of information and deliver detailed responses.

With just a few keystrokes, you can quickly compile a list of activities or accommodations. Instead of combing through multiple pages on a booking website, you can simply provide ChatGPT with your criteria, and it will promptly respond, most of the time.

Lightning-Fast Responses

ChatGPT’s real-time responsiveness is impressive and quite engaging. Simple queries can be answered in as little as 10-20 seconds, while more specific requests may take a bit longer.

When Travel Noire asked the chatbot to create a four-day itinerary featuring Black-owned businesses, it provided recommendations for Black-owned restaurants, bookstores, and neighborhoods in just 60 seconds.

Therefore, ChatGPT can save hours of scouring the internet for activity ideas. While some people enjoy the planning process, freeing up time in this manner allows for other tasks.

Detailed Responses Simplify Itinerary Planning

In Travel Noire’s experiment, ChatGPT produced a comprehensive schedule for a four-day trip to Los Angeles, tailored to the request for a Black-owned experience. The suggested itinerary includes soul food restaurants, cultural arts centers, and even schedules each day by morning, afternoon, and evening. It not only contains an exciting list of top Black-owned businesses in LA but also provides brief descriptions for each business.

How do I request ChatGPT to create a travel plan?

To get the best outcomes, make sure to be as precise as possible when asking a question. The more details you can provide to ChatGPT about your inquiry, the better the feedback you’ll receive. Also, don’t hesitate to ask intricate questions. The AI is designed to learn from being tested. It also learns from the continuous queries of each user, so asking more questions is beneficial. Examples of excellent questions to ask include:

- What are some excellent culinary tours in (mention a city/country)?

- Craft the optimal travel plan for (mention a place) within a $600 budget.

- What are some essential foreign expressions to learn when visiting (mention a place)?

- How much money should I budget for excursions in (mention a place)?

- Can you create a road trip itinerary from (mention a place) to (mention a place)?

- What are the top historical attractions to visit in (mention a place)?

- What is the most suitable means of transportation and route for (mention a place)?
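The question patterns above are essentially templates with a few blanks. As a hedged illustration, they can be filled in with Python's standard `string.Template`; the dictionary keys, the `fill` helper, and the placeholder names `$place`, `$origin`, and `$budget` are hypothetical choices made for this sketch.

```python
from string import Template

# Templates adapted from the questions above; keys and placeholder
# names are hypothetical.
QUESTIONS = {
    "food": Template("What are some excellent culinary tours in $place?"),
    "budget": Template(
        "Craft the optimal travel plan for $place within a $budget budget."
    ),
    "phrases": Template(
        "What are some essential foreign expressions to learn when visiting $place?"
    ),
    "road_trip": Template(
        "Can you create a road trip itinerary from $origin to $place?"
    ),
    "history": Template(
        "What are the top historical attractions to visit in $place?"
    ),
}


def fill(topic: str, **details: str) -> str:
    """Fill one template; substitute() raises KeyError if a blank is left open."""
    return QUESTIONS[topic].substitute(**details)


print(fill("food", place="Accra, Ghana"))
print(fill("budget", place="Hamburg", budget="$600"))
print(fill("road_trip", origin="Hamburg", place="Interlaken"))
```

Using `substitute` rather than `safe_substitute` is deliberate here: a forgotten detail fails loudly instead of sending a question that still contains a blank.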

Where AI Lacks

AI tools like ChatGPT can help sift through the vast array of travel recommendations available on the internet; nevertheless, there are a few noteworthy areas where the technology falls short compared to humans, at least for now.

Good for Planning, Not as Effective for Booking

At present, the application’s booking functionality is limited. OpenAI is expanding the platform to enable users to make travel arrangements through third-party booking platforms, but for the moment, options are few. For instance, Expedia now offers a plugin that integrates with ChatGPT, allowing users to convert their chat responses into real travel bookings.

In comparison to working with a travel agent, arranging travel plans is more do-it-yourself than hands-off. Currently, travel agents still have an advantage because personalized customer service from a human cannot be replaced. Collaborating with a travel professional can aid in creating a trip tailored specifically to your preferences. Moreover, in the event of an emergency or change of plans, a travel agent can provide guidance on cancellations or rescheduling.

Although the platform excels in planning, the journey toward a fully automated AI travel experience will be lengthy.

Restricted Planning Abilities

Currently, ChatGPT can only facilitate travel planning through its comprehensive recommendations and integrations with third-party booking services. Unlike interacting with a human, customization based on your individual interests might be limited. The intricacies of your and your group’s travel preferences may not be fully captured within the technological framework.

You might inform the chatbot that your uncle has difficulty walking long distances, so you require a centrally located place. While you might receive a reply with suitable suggestions, working with industry professionals is still preferable for a truly personalized itinerary.

Platform Overload Problems

With its current popularity, occasional traffic surges can lead to chatbot unavailability. The high demand and overwhelming interest can intermittently cause network errors on the site. This situation can be frustrating for individuals seeking travel insights when the site is at capacity.

Undoubtedly, the potential for how ChatGPT can enhance your travel planning is limitless. Consider giving it a try the next time you’re responsible for planning your group’s travels.

If you’re looking to embark on a vacation but are unsure where to begin, OpenAI’s ChatGPT can offer more than just a bit of support. ChatGPT has emerged as one of the most widely used language processing models of the decade, and people are discovering increasingly clever applications for it. It can help write code for a specific task, troubleshoot faulty code, plan daily schedules, and much more.

Another increasingly popular use for ChatGPT is vacation planning. There are several ways the service can be employed to assist in creating a vacation plan, from giving destination recommendations to helping craft a budget. Before getting started, users must create an OpenAI account, which is required to use the tool. It is available for free, and users seeking additional features can upgrade to a $20/month subscription plan.

Naturally, it’s important to note that ChatGPT’s suggestions serve as a starting point, and all plans should be diligently verified.


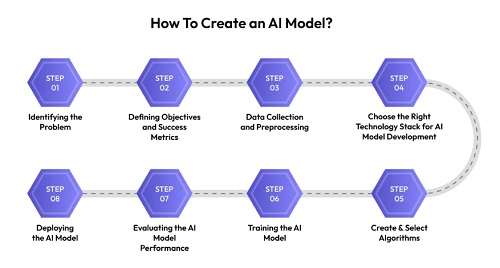

Request ChatGPT To Serve as a Travel Consultant


Users should first ask ChatGPT to act as a travel advisor. Since the tool can adopt various conversational styles and tones, asking it to converse like a travel consultant establishes a context and yields pertinent responses. After inputting the prompt, “Assist me in planning my next vacation as a travel advisor,” the tool responds, “Certainly! I would be delighted to aid you in planning your upcoming trip.” Subsequently, the language processing tool poses questions to assist users in planning their vacation, starting with their destination preferences, travel dates, duration, interests, activities, budget, and other pertinent details.

Find a Destination Based on Your Preferences


The first thing ChatGPT inquires about is destination preferences, such as where the individual would like to go and if they have a specific country or region in mind. Users can describe the characteristics of the desired destination, even if they are unsure of a particular city or country at the moment. For instance, someone in a warm region might want to spend a few days in a place with a pleasant climate, away from the city’s hustle and bustle.

Users can also specify whether they wish to travel abroad or stay within their own country. They can also convey if they prefer a place with scenic views or a wide range of recreational activities, or if they simply want to relax and savor delicious cuisine throughout the day. If users are unsatisfied with the initial list of recommendations, they can request ChatGPT to suggest alternate destinations.

Get Acquainted with a Destination before Departing

Asking ChatGPT to furnish additional details about a specific vacation destination

ChatGPT can also articulate why someone should or shouldn’t visit a particular place based on their interests. For instance, Screen Rant requested the tool to provide more details about Interlaken, Switzerland. In its response, ChatGPT elaborated that the region is among the most beautiful and vibrant places to visit. It highlighted that visitors can engage in adventure sports, hiking, water sports, embark on nearby excursions, and admire the natural Alpine beauty in the area.

Choose the Optimal Time for Your Visit

Asking ChatGPT when is the ideal time to visit a specific location

Once users have selected a destination, ChatGPT can also help determine the optimal travel dates, especially if their schedule is flexible. The chatbot can suggest the most favorable time to visit a specific location based on factors such as climatic conditions and tourist influx. In the given example, the chatbot recommended that the “best time to visit Interlaken and enjoy pleasant climatic conditions is during the summer months from June to August.”

Users can also provide the duration of their upcoming vacation, followed by their preferred dates. ChatGPT will inquire about any specific preferences related to activities that the user would like to incorporate into their itinerary, enabling it to tailor its responses accordingly.

Explore Activities in the Vicinity

Asking ChatGPT about activities to engage in during a vacation

Screen Rant asked ChatGPT to propose enjoyable activities available in or around the region, particularly those that are safe to partake in with a group of four to five individuals. In its response, the language processing model included activities such as paragliding and skydiving, which cater to thrill seekers. It also suggested other activities like hiking, boat cruising, rafting, and biking along scenic routes in and around Interlaken. ChatGPT also advised users to verify the credentials and reviews of the tour operators organizing these activities for a better understanding of the experiences.

Set Your Budget and Plan Accordingly

Inquire with ChatGPT about the potential expenses for the trip

To start planning expenses for the vacation, ChatGPT asks for the “specific budget range for the vacation.” At this stage, users should enter their trip budget or request an estimate of the potential cost of a trip to the specified destination for the given number of travelers. The chatbot considers factors such as lodging, transportation, activities, dining, and personal preferences. In this example, it concluded that the trip might cost between $2,500 and $6,000, not including international flights.

The language processing tool can provide a rough estimate of travel costs depending on the preferred mode of transportation, whether it’s public transport or a rented cab. However, it’s worth noting that the chatbot’s database might not be up to date, so it’s advisable to further validate its suggestions with additional research.
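The arithmetic behind such an estimate is simple enough to reproduce when checking the chatbot's figure. The Python sketch below sums per-person daily costs across travelers and days. Every name and number in it (`estimate_trip_cost`, the category rates, the group size) is a placeholder assumption for illustration, not a figure from ChatGPT or from actual Interlaken prices.

```python
def estimate_trip_cost(daily_rates, travelers, days):
    """Return (low, high) total cost for the whole group.

    daily_rates maps a category to a (low, high) per-person, per-day cost.
    """
    low = sum(lo for lo, _ in daily_rates.values()) * travelers * days
    high = sum(hi for _, hi in daily_rates.values()) * travelers * days
    return low, high


# Placeholder rates in US dollars; replace with figures you have verified.
rates = {
    "lodging": (40, 100),
    "food": (30, 70),
    "local_transport": (10, 25),
    "activities": (20, 55),
}

low, high = estimate_trip_cost(rates, travelers=4, days=5)
print(f"Estimated range: ${low:,} to ${high:,}")
# prints "Estimated range: $2,000 to $5,000"
```

Comparing a hand-built range like this with the chatbot's answer makes it easier to spot which category drives any large difference.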

Seek Accommodation Advice

Ask ChatGPT for recommendations on accommodations for a trip

ChatGPT can also offer users suggestions for well-known lodging options. Upon asking the chatbot, “please recommend some budget-friendly accommodation in Interlaken, Switzerland,” it presented various options. While it correctly identified the names of the places, the price range it provided differed from the actual rates available online. In the response, ChatGPT indicated that “these are estimated costs and can vary based on factors such as the time of year, availability, and specific room types.” Therefore, while users can gather some recommendations, it’s best to verify this information for a more precise understanding.

Create a Travel Plan

Ask ChatGPT to generate an itinerary for a trip

Finally, request ChatGPT to put together an itinerary containing all the details, including the daily schedule, travel times between locations, and other relevant information. Initially, the tool responds with a paragraph, but users can request it to design a table with multiple columns for ease. Although ChatGPT cannot export the itinerary into a spreadsheet, users can always capture screenshots and obtain a hard copy or save a digital version on their mobile device or tablet.
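If the table can be copied out of the chat window as text, a few lines of code can turn it into a file that a spreadsheet program opens. The Python sketch below assumes the copied table arrives as pipe-delimited Markdown, which may not hold for every way of copying. The function name `markdown_table_to_csv` and the sample rows are hypothetical, and cells that themselves contain a pipe character are not handled.

```python
import csv
import io


def markdown_table_to_csv(table_text):
    """Convert a pipe-delimited Markdown table into CSV text."""
    rows = []
    for line in table_text.strip().splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip anything that is not a table row
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        # Skip the separator row under the header, e.g. |---|:---:|
        if all(cell and set(cell) <= set("-: ") for cell in cells):
            continue
        rows.append(cells)

    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()


# Hypothetical itinerary table, as it might be copied from a chat window.
sample = """
| Day | Morning | Afternoon |
|-----|---------|-----------|
| 1 | Arrive in Zurich | Train to Interlaken |
| 2 | Hike | Boat cruise |
"""

print(markdown_table_to_csv(sample))
```

Saving the returned text to a file ending in `.csv` gives something most spreadsheet applications can import.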

In this specific example, the itinerary included all the necessary information, but again, some details appeared slightly inaccurate, so users should always double-check. For instance, the language model stated that a flight from Lucknow, Uttar Pradesh, India, to Zurich, Switzerland, takes 10 to 12 hours, while the current fastest flight actually takes more than 13 hours. However, the approximate travel time from Zurich to Interlaken is correct.

While it’s not advisable to rely solely on ChatGPT, its suggestions can serve as a helpful starting point for planning a vacation.

The era of navigating numerous websites to arrange travel or plan vacations may soon be outdated due to the increasing use of artificial intelligence tools like ChatGPT.

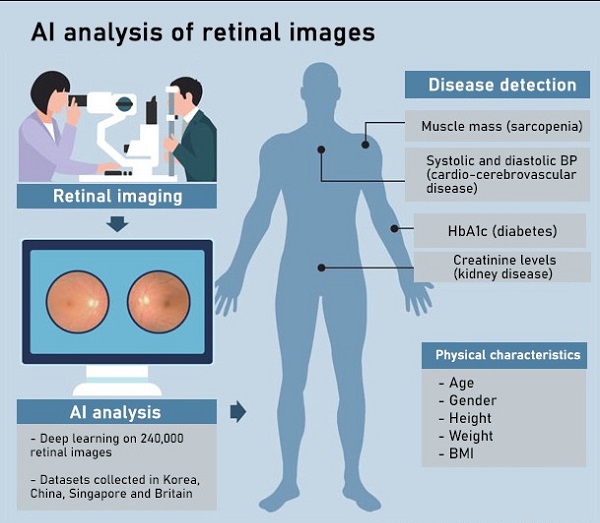

AI can swiftly analyze and gather information from various sources online, delivering responses that resemble those of a human, thereby offering a comprehensive solution for travelers looking to determine attractions, accommodations, and dining options for their journeys, according to Jangwoo Jo, Ph.D., an assistant professor at Metropolitan State University of Denver’s School of Hospitality.

When ChatGPT-4 was launched last year, itinerary planning was highlighted as one of its key features, said Jo, who describes himself as someone who readily embraces new technology.

“This tool is extremely beneficial for the hospitality and tourism sectors,” he mentioned. “I believe this technology will become widely utilized in our everyday lives, especially for trip or travel planning.”

A significant attribute of large language models, such as ChatGPT, is their contextual awareness, which means they can grasp the essence of what is being requested, Jo explained. “It comprehends the context: ‘I find myself in this situation. What is a possible solution? What do I need to know?’ This ability enables it to provide tailored travel information.”

“Thanks to context awareness, personalized suggestions that enhance a customer’s experience in the hospitality industry can be optimized,” Jo stated.

To illustrate the capabilities of AI-assisted travel planning in a recent Zoom interview, Jo opened ChatGPT-4o, the latest version of the platform, and posed various questions regarding a hypothetical monthlong trip to his hometown, Seoul, South Korea. The platform generated recommendations covering nearly all aspects of travel.

Flights and attractions

ChatGPT presented a selection of flights from Denver to Seoul found on Expedia, Kayak, and Momondo, and subsequently offered potential lodging options for a monthlong stay. When Jo inquired, “What are the must-see attractions and activities in Seoul during July?” ChatGPT promptly suggested several local historical sites, including the Namsan Tower.

Food and transport

Jo also requested recommendations for restaurants and places to buy cooking supplies, and the platform provided insights on navigating Seoul’s public transportation system.

Jo concluded that ChatGPT-4o was largely accurate. “It has a broad general knowledge of tourist spots,” he remarked.

Language

Finally, he asked, “What are some essential Korean phrases and cultural tips for visiting Seoul?” ChatGPT-4o provided a list of greetings and basic phrases, such as the Korean words for “hello,” “goodbye,” “thank you,” “please,” and “Do you speak English?”

Generative AI models can “understand” as many as 100 languages, enabling them to analyze customer reviews and other written content in those languages, Jo noted.

Booking

Jo did have one caveat: “While most of the data is generally reliable, it does not offer a specific option to actually finalize the plans, so you still have to participate in making reservations, processing payments, negotiating prices, and organizing the trip,” he stated.

This could change in future versions of ChatGPT, he suggested, which could pose challenges for online travel platforms like Expedia and Kayak as the AI learns to handle bookings on behalf of users.

“I believe that in the future, generative AI tools will be able to make those reservations and transactions autonomously,” Jo stated. “These online travel agencies are in significant jeopardy. They need to quickly incorporate this AI capability into their systems before AI tools fully integrate online travel services within them.”

When Jason Brown planned his summer vacation to Amsterdam and Ireland this year, he opted not to consult travel books or browse Instagram.

Instead, the founder of recruitment firm People Movers turned to ChatGPT, OpenAI’s generative artificial intelligence tool.

He asked the AI numerous questions to assist in crafting an itinerary for his 10-day trip to Amsterdam and Ireland, covering Dublin and Galway, which he took in July and August with his wife, their two sons aged 20 and 16, and one of their friends.

“In the past, I would always rely on websites like TripAdvisor, but I realized that I had all the information at my disposal [through AI], and it provides results in 15 seconds.” He described the experience as “fantastic.”

“It produced a golf itinerary for Dublin and a four-day plan for the rest of Ireland. It was incredible how it broke it down into morning, afternoon, and evening activities.

“For instance, on the first day, it recommended arriving in the morning, visiting Trinity College and Grafton Street in the afternoon, and then going to Temple Bar in the evening.” Regarding Amsterdam, he noted that it listed key attractions such as the Anne Frank Museum, the Van Gogh Museum, and the Jordaan district. As his trip plans evolved, he continued to refine his queries on ChatGPT.

While he took up many of the AI suggestions, Mr Brown says he still relied on word-of-mouth recommendations from an online community of people who attended the same college as he did, while a friend they visited in Amsterdam showed them around.

“That way we experienced a few things we wouldn’t have found using ChatGPT. But it gives a perfect skeleton of a trip, and gives you everything you need and want to see.”

AI is pervading all areas of our life and travel is no different. As well as ChatGPT there are other generative AI tools such as Google’s Gemini, Microsoft’s Copilot, and dedicated travel AI sites such as Trip Planner and Ask Layla.

It appears to be becoming part of the travel organisation plans for some, with one in 10 Britons having used AI for travel planning, according to a survey by Sainsbury’s Bank Travel Money. One in five said they are likely to use it in the future.

However, the study also suggested that travel AI still has some way to go before it can take on all your holiday plans.

It found that of those who had used AI for travel planning, more than a third (38%) said that it brought up generic answers, 37% said it had missing information, while 30% said it had incorrect information.

While generative AI can help deliver personalised travel itineraries and recommendations, it is only as good as the information it is trained on, and where this information is out of date, biased, erroneous, false and so on, then the AI will perpetuate the misinformation, points out Caroline Bremmer, head of travel and tourism research at analysts Euromonitor International.

“The challenge is ensuring real-time information that is factually correct. There are dangers if consumers do not undertake due diligence to verify the results provided by Gen AI with other sources, including talking to people in the know, such as local residents or travel agents.”

Sardar Bali is the co-founder at Berlin-based AI travel planner and guide Just Ask Layla.

He says accuracy is a key part of the service.

“We have internal tools,” says Bali. “All content goes through a two-step verification process, one of which is more automated, and we have a more manual process where internal teams look at different content and researches it a bit.”

But he admits some content “might slip through”.

“For example, it once mentioned an Eiffel Tower in Beijing; it might be tagged incorrectly. But it’s getting better and better every day.”

That improvement is likely to come, particularly as more services come online.

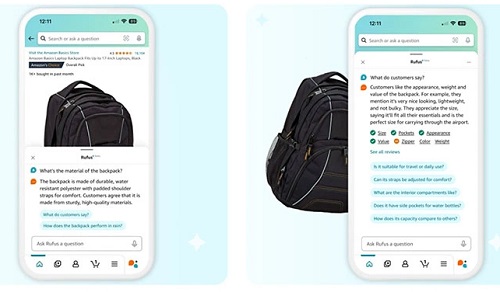

Earlier this year, travel giant Expedia launched an AI service for US customers. Called Romie, it’s part of the company’s iPhone app.

“A trip can involve complex planning… there’s gazillions of options,” says Shiyi Pickrell, senior vice president of data and AI at Expedia Group.

She says Romie can help narrow down the choice of destination, and compare different locations. If you want a beach theme, it can compare British beach destinations to Spain and France for example, or look at which ones are family-friendly.

However, AI doesn’t always go to plan.

Rebecca Crowe, 29, a freelance writer living in Liverpool, says she often taps into AI to help plan her trips, but proceeds with caution after several unhelpful experiences including a trip to Lecco, a town located next to Lake Como in Italy.

“The experience wasn’t great,” says Crowe. “It listed all the popular stuff to do that you’d find with a standard Google search, and the itineraries didn’t make a lot of logical sense.

“They tried to have us in Milan in the morning and Bellagio in the afternoon, and with the train timetables and ferry schedules, this would not really be feasible. It then had us back in Milan the following day to explore more. Following this itinerary, we’d have spent more time on transport than anything else.”

She’s also referred to AI to find gluten-free restaurants when travelling with a friend who has coeliac disease.

“This pulled back results that were massively out of date and just wrong in some cases. I found myself having to manually cross-reference each suggestion to see if the place was even still open.

“If I’m looking for seasonal things like ferry timetables in the shoulder season [months around the peak season], AI just doesn’t seem to be up-to-date and accurate enough. Same for museums with seasonal opening times.”

Instead she advises people to only use it as a sounding board for broad inspiration. “You can find blogs and websites with complete guides and itineraries that are a lot more reliable and up-to-date. If you want a rough idea of things to do in a certain city, it’s a great jumping-off point, but the amount of fact-checking it requires means that it doesn’t really save you much time in the long run.”

Organizing a getaway should ideally be enjoyable. However, compiling a list of activities for a journey can also prove to be time-consuming and stressful, especially if you’re uncertain about where to start.

Fortunately, technology companies have been vying to develop tools that assist with that. Travel has emerged as one of the most favored applications for AI, which Google, Microsoft, and OpenAI prominently highlight in their demonstrations, while companies like Tripadvisor, Expedia, and Booking.com have begun to introduce AI-driven vacation-planning solutions as well. Although fully automated AI agents that can oversee every aspect of planning and booking your vacation are still not quite here, the current generation of AI tools is still quite effective at assisting with various tasks, such as creating itineraries or enhancing your language abilities.

AI models can sometimes generate inaccurate information, so it’s essential to verify their recommendations yourself. Nonetheless, they can still serve as a valuable resource. Continue reading for some suggestions on how AI tools can simplify your planning process, giving you more leisure time to enjoy your trip.

Determine possible destinations for your getaway

First and foremost: you must decide where to go. The advantage of large language models (LLMs) like ChatGPT is that they are trained on extensive amounts of internet data, allowing them to process information that would take humans hours to research and quickly summarize it into straightforward paragraphs.

This makes them excellent resources for generating a list of potential places you might want to visit. The more detailed you are in your request, the better—for instance, informing the chatbot that you’re looking for recommendations for destinations with warm weather, family-friendly beaches, and vibrant nightlife (like Mexico, Thailand, Ibiza, and Australia) will yield more applicable options than ambiguous requests.

However, given AI models’ tendency to produce incorrect information—referred to as hallucinating—it’s advisable to verify that their details about suggested locations and potential activities are indeed correct.

How to utilize it: Activate your preferred LLM—ChatGPT, Gemini, or Copilot are a few available models—and request it to recommend travel destinations. Include key information such as desired temperatures, locations, duration of stay, and activities of interest. An example would be: “Provide a list of destinations for two travelers embarking on a two-week vacation. The locations should be warm during July and August, situated in a city but easily accessible to a beach.”
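For travelers who expect to try several variations of the same request, the prompt can be assembled from its parts. The Python sketch below is one way to do that under simple assumptions. The function `build_destination_prompt` and its parameters are hypothetical, and the code only builds the text to paste into a chatbot; it does not contact any service.

```python
def build_destination_prompt(travelers, duration, months, requirements):
    """Assemble a destination request from a few trip details."""
    month_text = " and ".join(months)
    requirement_text = ", ".join(requirements)
    return (
        f"Provide a list of destinations for {travelers} travelers "
        f"embarking on a {duration} vacation. "
        f"The locations should be warm during {month_text}, {requirement_text}."
    )


prompt = build_destination_prompt(
    travelers="two",
    duration="two-week",
    months=["July", "August"],
    requirements=["situated in a city but easily accessible to a beach"],
)
print(prompt)
```

With these inputs the function reproduces the example request quoted above; changing one argument at a time shows how each detail affects the recommendations.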

Select attractions to explore while you’re there

Once you’re on holiday, you can use platforms like ChatGPT or Google’s Gemini to create day trip itineraries. For instance, you might use a request such as “Create an itinerary for a day of driving through the countryside around Florence in Chianti. Include several medieval villages and a winery, and conclude with dinner at a restaurant that has a nice view.” As with any LLM request, being as detailed as possible improves the results. To be cautious, it’s wise to cross-check the final itinerary with Google Maps to ensure that the suggested order is logical.
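One rough way to judge whether a suggested order is logical is to compare time on the road with time at each stop. The Python sketch below computes the share of the day spent in transit. The function `transit_share`, the stop names, and all durations are hypothetical placeholders; real travel times would have to be looked up in a maps app.

```python
def transit_share(stops, travel_minutes):
    """Return the fraction of the day spent travelling between stops.

    stops is a list of (name, minutes_spent_there) in visiting order.
    travel_minutes maps a (from, to) pair to travel time in minutes.
    """
    names = [name for name, _ in stops]
    on_site = sum(minutes for _, minutes in stops)
    in_transit = sum(travel_minutes[(a, b)] for a, b in zip(names, names[1:]))
    return in_transit / (on_site + in_transit)


# Hypothetical day plan and travel times, for illustration only.
stops = [("Florence", 0), ("Village A", 90), ("Winery", 120), ("Restaurant", 120)]
travel = {
    ("Florence", "Village A"): 45,
    ("Village A", "Winery"): 25,
    ("Winery", "Restaurant"): 40,
}

share = transit_share(stops, travel)
print(f"{share:.0%} of the day is spent in transit")
# prints "25% of the day is spent in transit"
```

A share that climbs toward half the day suggests the stops should be reordered or trimmed before setting out.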

In addition to LLMs, there are also specialized tools that can assist you in assessing the types of conditions you may face, including weather and traffic. If you’re planning an urban getaway, you might want to explore Immersive View, a feature Google Maps introduced last year. It employs AI and computer vision to create a 3D representation showing how a specific spot in a supported city will look at a particular time of day up to four days in advance. By leveraging weather forecasts and traffic information, it can help you determine whether a rooftop bar will be sunny tomorrow evening or if choosing an alternate route for a weekend drive would be wiser.

How to utilize it: Check whether your city is among those Google supports. Then, open Google Maps, navigate to your area of interest, and select Immersive View. You’ll see an interactive map with options to adjust the date and time of day you wish to examine.

Checking flights and lodging

After deciding on your destination, the next step is to book your flights and accommodations. Many travel booking platforms have incorporated AI chatbots into their services, most of which utilize ChatGPT technology. However, unless you’re particularly loyal to a specific site, it might be beneficial to consider a broader perspective.

Searching for flights across multiple browser tabs can be tedious, but Google’s Gemini offers a solution. This model connects with Google Flights and Google Hotels, providing real-time information from Google’s partner companies, making it simple to compare both travel times and, importantly, costs.

This method provides a straightforward way to look for flights and lodging within your budget. For instance, I instructed Gemini to find me round trip flights from London to Paris for no more than £200. This serves as an excellent starting point to gauge your potential expenses and travel duration.

How to utilize it: Once you access Gemini (you might need to log into a Google account), open Settings and go to Extensions to ensure Google Flights & Hotels is activated. Then, return to the Gemini main page and input your request, detailing your departure and arrival locations, the duration of your visit, and any budget constraints you want to include.

If you love using spreadsheets, you can ask Gemini to export your itinerary to Sheets, which you can later share with family and friends.

Enhance your language abilities

You may have heard that practicing speaking is the best way to improve in a foreign language. However, hiring tutors can be costly, and you might not have anyone in your circle fluent in the language you’re aiming to enhance.

In September of the previous year, OpenAI upgraded ChatGPT to enable users to converse with it through speech. You can experience this for yourself by using the ChatGPT app available for Android or iOS. I opened the voice chat feature and recited some basic phrases in French, which it accurately translated into English (“Do you speak English?” “Can you help me?” and “Where is the museum?”) despite my lackluster pronunciation. It was also effective at providing alternative expressions when I requested less formal versions, such as replacing bonjour (hello) with salut, which means “hi.” Additionally, I was able to engage in basic dialogues with the AI voice.

How to use it: Download the ChatGPT application and tap on the headphone icon located beside the search bar. This will initiate a voice conversation with the AI model.

Translate while you’re out

Google has seamlessly integrated its robust translation technology into its camera features, allowing users to simply direct their phone camera at an unfamiliar phrase to see it converted to English. This is especially useful for understanding menus, road signs, and store names while exploring.

How to use it: Download the Google Translate application and select the Camera option.

Craft online reviews (and social media captions)

Positive feedback is an excellent way for small businesses to differentiate themselves from their online competitors. However, composing these reviews can be time-consuming, so why not utilize AI for assistance?

How to use it: By informing a chatbot like Gemini, Copilot, or ChatGPT about what you enjoyed regarding a specific restaurant, guided tour, or destination, you can simplify the process of writing a brief summary. The more detailed you are, the better the output will be. Prompt the model with something like: “Write a positive review for the Old Tavern in Mykonos, Greece, that mentions its delicious calamari.” While you may not want to use the response verbatim, it can certainly help with the structure and wording of your review.

Likewise, if you find it challenging to come up with captions for your travel-related Instagram posts, asking the same language models for help can be an effective way to overcome writer’s block.