From steam engines to assembly lines with conveyor belts and factory robots, the manufacturing sector has consistently been at the […]

Author: FredMT Admin

Artificial Intelligence (AI) has emerged as a game-changing technology in the high-stakes realm of player scouting and recruitment

More and more football clubs are relying on artificial intelligence when looking for new players. This can create scores for […]

Innovation in a crisis: Why it is more critical than ever

A recent analysis of scientific articles and patents from previous decades suggests that major discoveries in science are becoming less […]

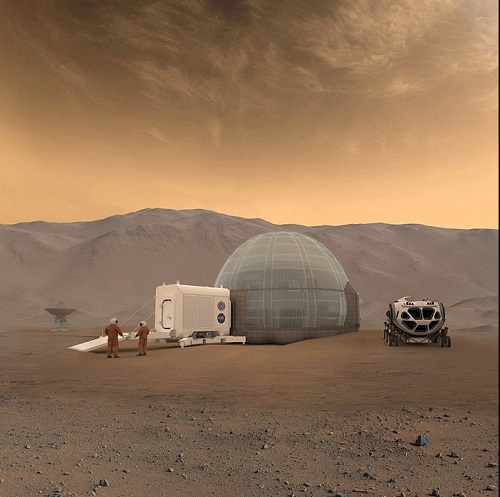

How did the AI robot find the way to produce oxygen from water on Mars?

Oxygen on Mars? A Chinese robot could search for the optimal production method on the red planet completely autonomously. Artificial […]

Can psychological tests uncover personality traits and ethical inclinations in AI models?

Psychology is a field of study that focuses on understanding people’s actions, feelings, attitudes, thoughts, and emotions. Although human behavior […]

In England, an AI chatbot is being used to help individuals struggling to find a psychotherapy placement

In England, an AI chatbot is being used to help people find a psychotherapy placement, and according to an analysis, […]

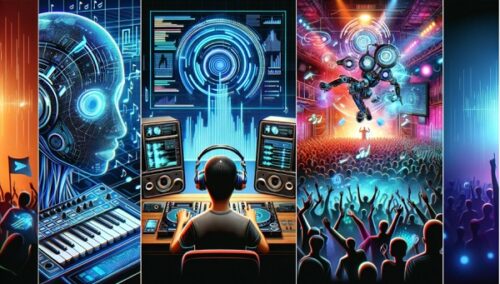

The field of AI music has seen rapid advancement in recent years

Artificial intelligence is making its way into various aspects of daily life, including music composition. Universal Music is now seeking […]

The energy consumption of AI tools is substantial and on the rise

The use of artificial intelligence is growing, leading to increased energy demands in data centers. Experts warn that the electricity […]

Experts from research, science and the tech industry called for a pause in the development of artificial intelligence

The rapid development of artificial intelligence is attracting criticism. More than 1,000 experts from tech and research, including Elon Musk, […]

Artificial intelligence (AI) could have a major impact on the tourism industry

Artificial intelligence (AI) could have a major impact on the tourism industry. Will holiday recommendations and personalized excursion suggestions become the norm? What […]

Another job lost to AI. How many more jobs are in danger?

AI is rapidly evolving and impacting various aspects of contemporary life, but some specialists are concerned about its potential misuse […]

The AI boom is causing chip company Nvidia’s business to grow explosively

Artificial intelligence has helped the chip company Nvidia achieve excellent business figures. The company is the largest provider of […]

How do smart cars use AI?

It appears that discussions, debates, and subtle signals related to generative AI are everywhere these days. The automotive industry, like […]

Tesla’s Next Move 2025: Model 2 Or Model Y Juniper? Wait and See

Tesla has always been clear: for Musk’s marque, making cars is more a crusade than a commercial enterprise. Its […]

Intel’s missed opportunity laid the foundation for the success of the British company Advanced Risc Machines (Arm)

Arm makes its debut on the Nasdaq stock exchange in New York today. The chip designer’s technology is found in practically every […]

In the future, strict rules for the use of artificial intelligence will apply in the EU

In the future, strict rules for the use of artificial intelligence will apply in the EU. The law is important, […]

How is AI changing the workplace?

Artificial intelligence (AI) technology is changing the world: it can write presentations, advertising copy, or program code in seconds. Many people […]

How is artificial intelligence (AI) being used in the military and security?

Artificial intelligence (AI) is considered a topic of the future. But in some companies and industries, it is already part […]

What are the benefits of developing AI in healthcare?

Malnutrition, delirium, cancer – with all of these diagnoses, doctors in a New York hospital receive support from artificial intelligence. […]

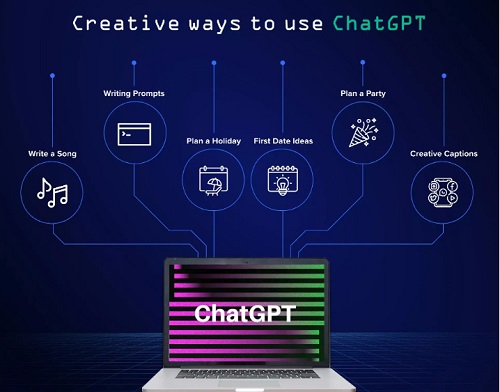

The release of the chatbot ChatGPT

So far, users can only communicate with the ChatGPT bot using the keyboard. But that could change. Real conversations or […]