Rimma Iontel from Red Hat talks about how the company has ramped up investments in OpenShift Virtualization and what lies […]

Author: FredMT Admin

Nvidia ‘opening’ of AI RAN risks upheaval for Ericsson and Nokia

Data exchanges in contemporary 5G networks use pilot signals to assess channel conditions. They function like scouts sent to investigate the […]

Why do so many virtual assistants have female voices?

Is your smartphone biased against women? The emergence of voice assistant technology, along with long-standing gender disparities in the tech sector, has […]

How Your Brain May One Day Control Your Computer

The mouse whose battery runs flat. The trackpad that stops responding to a gliding finger. The […]

Asia drives budget 5G smartphone boom

The latest premium smartphones from brands like Apple and Huawei are currently generating significant enthusiasm, but what about the low-end […]

5G Devices Market Size & Share Analysis – Growth Trends & Forecasts (2025 – 2030)

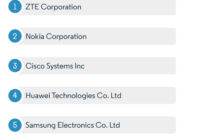

The Global 5G Equipment Market is categorized by form factor (including Modules, CPE (Indoor/Outdoor), Smartphones, Hotspots, Laptops, and Industrial-Grade CPE/Router/Gateway), […]

How to Choose Game Servers?

Choosing the right game server hosting is crucial for delivering the best experience to your players. An inadequate server infrastructure […]

Survival Servers Review – The Pros and Cons

Survival Servers is a top-tier game server hosting provider that focuses on delivering high-performance and dependable hosting solutions for gamers. […]

Minecraft: How to play, strategies, tips and tricks for beginners

Minecraft is regarded as one of the most groundbreaking and innovative video games ever created. Developed by Markus “Notch” Persson […]

Fun Minecraft Tips and Tricks: Elevate Your Gameplay

You’re a fan of Minecraft, but occasionally it feels like you’re just beginning to explore its potential. You come across […]

The social media platform is changing the face of travel as we know it

From engaging videos that highlight less popular destinations to tips on packing and transportation, TikTok has become an essential source […]

Verizon customers will soon see yet another fee increase on their next bill

Verizon subscribers will soon experience yet another increase in fees on their upcoming bills, a common tactic among wireless providers. […]

Would you travel by flying taxi?

The chance to glide above the traffic congestion of some of the world’s biggest cities feels like a long-anticipated glimpse into […]

The future of air mobility: Electric aircraft and flying taxis

Toyota is investing an additional $500 million in Joby Aviation to help the air taxi startup with the certification and […]

Jaguar’s new concept car divides opinion

Luxury carmaker Jaguar has unveiled its latest electric concept vehicle, which has sparked mixed reactions, similar to a […]

Ford’s UK boss has called on the government to provide consumer incentives

Ford’s UK leader is urging the government to introduce consumer incentives of up to £5,000 per vehicle to increase the […]

UK electric car sales hit a record high in September

Sales of electric vehicles in the UK reached an all-time high in September, despite executives from major automakers expressing concerns […]

AI and humans working together to make the internet safer

The countless pieces of digital content uploaded to online platforms and websites every day make content moderation an essential yet […]

Marvell Technology stock was up 12% before the bell on Wednesday

Chipmaker Marvell Technology (MRVL) on Tuesday forecast fourth-quarter revenue above expectations, driven by strong demand for its specialized artificial intelligence […]

Sell Chrome to end search monopoly, Google told

Google is facing significant challenges. As my colleague Dan Milmo reported, the US Department of Justice “has proposed a comprehensive […]